Study reveals concerning cognitive effects of using ChatGPT for essay writing

MIT research shows LLM assistance reduces brain connectivity and memory retention in student essay writing tasks.

A comprehensive study from MIT Media Lab has revealed significant neurological and behavioral differences when students use Large Language Models (LLMs) like ChatGPT for essay writing compared to traditional research methods or working without assistance. The research, conducted over four months with 54 participants, was published as a preprint on June 10, 2025, by a team led by Nataliya Kosmyna. The study divided participants into three groups: those using ChatGPT, those using Google search, and those writing without any external tools.

Summary

Who: MIT Media Lab researchers led by Nataliya Kosmyna conducted the study with 54 participants aged 18-39 from Boston-area universities.

What: A four-month investigation comparing brain activity and essay quality among users of ChatGPT, Google search, and no external tools, revealing reduced neural connectivity and memory retention in AI users.

When: The study was conducted over four months beginning in January 2025, with results published June 10, 2025.

Where: The research took place at MIT Media Lab with participants from MIT, Harvard, Wellesley, Tufts, and Northeastern universities.

Why: The study aimed to understand the cognitive costs of LLM usage in educational contexts as AI tools become increasingly prevalent in academic and professional settings.

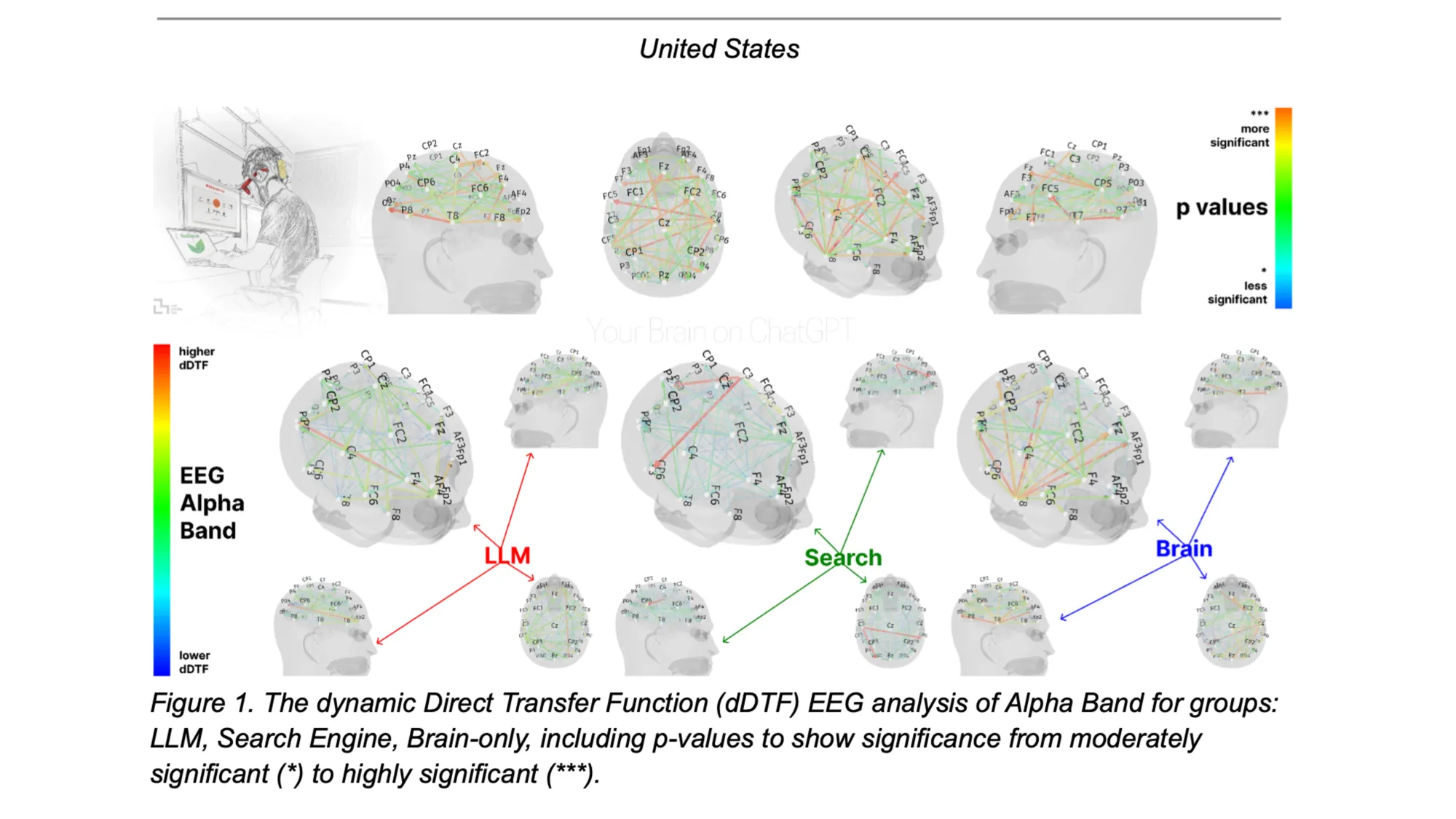

Using electroencephalography (EEG) to monitor brain activity across 32 regions, researchers discovered striking differences in neural connectivity patterns. Brain connectivity systematically scaled down with the amount of external support: the Brain‑only group exhibited the strongest, widest‑ranging networks, the Search Engine group showed intermediate engagement, and LLM assistance elicited the weakest overall coupling.

The findings revealed measurable cognitive costs that accumulated over time. Of the three groups, ChatGPT users had the lowest brain engagement and "consistently underperformed at neural, linguistic, and behavioral levels".

Memory and comprehension severely impacted

One of the most concerning findings involved participants' ability to recall their own work. The study showed that 83% of LLM users failed to accurately quote from essays they had written just minutes earlier, compared to only 11% in both the search engine and brain-only groups.
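
As a rough sanity check on these percentages, the short sketch below converts them into implied head counts. It assumes the 54 participants were split evenly into three groups of 18, which this article does not state; `GROUP_SIZE` and `implied_count` are illustrative names only.

```python
# Assumption: 54 participants split evenly across three groups.
GROUP_SIZE = 54 // 3


def implied_count(percent, group_size=GROUP_SIZE):
    """Return the whole number of participants closest to the given percentage."""
    return round(percent / 100 * group_size)


llm_failed = implied_count(83)    # LLM group
other_failed = implied_count(11)  # search engine group or brain-only group

print(f"LLM group: about {llm_failed} of {GROUP_SIZE} could not quote their essay")
print(f"Other groups: about {other_failed} of {GROUP_SIZE} each")
```

Under that assumption, the reported figures correspond to whole-person counts per group, which is worth keeping in mind when reading percentages drawn from small samples.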

Two English teachers, who evaluated the essays without knowing which group had produced them, identified clear patterns. They called the LLM-assisted essays largely "soulless", noting their homogeneous structure and content.

Fourth session reveals lasting effects

In the study's fourth session, researchers switched participants between groups to test for lasting effects. Those who had previously used ChatGPT and were asked to write without assistance (the LLM-to-Brain participants) showed weaker neural connectivity and under-engagement of alpha and beta networks.

Conversely, participants who had initially written without assistance and then used LLM tools showed enhanced brain activity, suggesting that prior independent cognitive work provided protection against AI-induced cognitive decline.

Technical analysis reveals homogenization patterns

The study employed Natural Language Processing analysis to examine essay content patterns. Researchers found consistent homogeneity in Named Entity Recognition, n-grams, and topic ontology within each group. LLM users produced statistically similar essays with shared vocabulary and structural approaches, while brain-only participants showed significant individual variation in their writing approaches.
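
To make the idea of n-gram homogeneity concrete, here is a minimal Python sketch that scores how much a set of essays overlap in word bigrams, using mean pairwise Jaccard similarity. It is an illustrative stand-in, not the study's actual NLP pipeline; the function names and the toy essays are hypothetical.

```python
from itertools import combinations


def ngrams(text, n=2):
    """Return the set of word n-grams in a text (lowercased, whitespace-split)."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def jaccard(a, b):
    """Jaccard similarity of two sets: size of intersection over size of union."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def mean_pairwise_similarity(essays, n=2):
    """Average n-gram Jaccard similarity over all pairs of essays in a group."""
    sets = [ngrams(essay, n) for essay in essays]
    pairs = list(combinations(sets, 2))
    if not pairs:
        return 0.0
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


# Hypothetical toy essays, invented for illustration only.
similar = [
    "happiness comes from within and grows with gratitude",
    "true happiness comes from within and grows with practice",
]
varied = [
    "my grandmother baked bread every sunday morning",
    "markets reward patience more often than brilliance",
]

print("similar group:", mean_pairwise_similarity(similar))
print("varied group:", mean_pairwise_similarity(varied))
```

A higher score for one group than another would indicate more shared phrasing within that group. A real analysis would also need proper tokenization, larger n-gram ranges, and a significance test.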

The research team also developed an AI judge to score essays alongside human teachers. Human evaluators demonstrated greater skepticism about uniqueness and content quality compared to the AI assessment system, highlighting the ability of experienced educators to identify AI-assisted work.

Implications for marketing and education sectors

This research carries significant implications for the marketing community, where content creation and critical analysis form core competencies. According to PPC Land's recent coverage, knowledge workers already report decreased cognitive effort when using AI tools, with 72% experiencing reduced effort for basic recall tasks.

The accumulation of what researchers term "cognitive debt" presents challenges for professionals who rely on analytical thinking and original content creation. In the authors' words: "As the educational impact of LLM use only begins to settle with the general population, in this study we demonstrate the pressing matter of a likely decrease in learning skills based on the results of our study."

The study's energy consumption analysis also revealed environmental considerations, with LLM queries consuming approximately 10 times more energy than traditional search queries, adding material costs to the cognitive ones identified.

Timeline

- January 2025: MIT research team begins four-month study with 54 participants

- March 2025: Fourth session conducted with 18 participants to test group-switching effects

- June 10, 2025: Study published as preprint on arXiv

- Recent: PPC Land analysis examines workplace impact of similar AI tools