Instagram adopts PG-13 ratings for teen content moderation

Meta announced PG-13 movie rating standards for Instagram Teen Accounts on October 14, 2025, filtering mature content for users under 18, with plans to extend similar protections to Facebook.

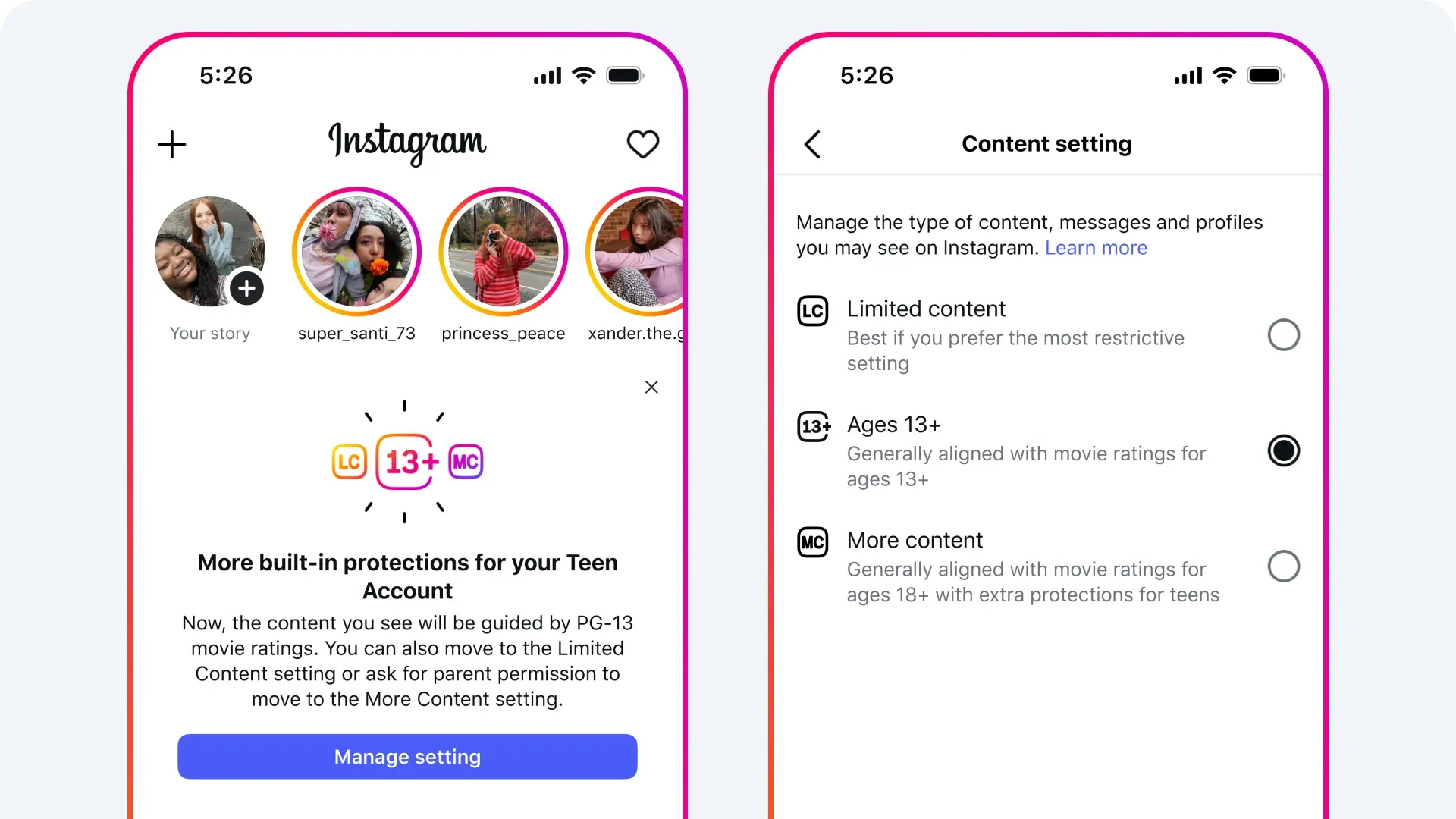

Instagram's Teen Accounts now operate under PG-13 movie rating standards, a significant shift in how the platform determines what teenage users can see. Meta announced the change on October 14, 2025, representing the most substantial modification since Teen Accounts launched in September 2024.

The stakes are high. With hundreds of millions of teenage users globally, Meta faces mounting regulatory pressure while simultaneously defending its advertising business model. The PG-13 framework attempts to split this difference—creating content restrictions parents recognize from movie theaters while maintaining enough flexibility to keep teens engaged on the platform.

Teens under 18 now automatically enter an updated 13+ content setting aligned with PG-13 movie guidelines. "This means that teens will see content on Instagram that's similar to what they'd see in a PG-13 movie," according to Meta's documentation. Opting out requires parental permission, a critical detail given that 97% of teens aged 13-15 have remained in default restrictions since the initial Teen Accounts launch.

Subscribe PPC Land newsletter ✉️ for similar stories like this one. Receive the news every day in your inbox. Free of ads. 10 USD per year.

The regulatory context

Meta's timing is deliberate. The company operates in an increasingly hostile regulatory environment where platforms face unprecedented scrutiny over youth safety. New COPPA rules took effect June 23, 2025, fundamentally altering how digital platforms handle children's data collection, and Google's immediate suspension enforcement for child protection violations begins October 22, 2025.

The competitive landscape also matters. Google deployed machine learning age detection in July 2025 to automatically disable ad personalization for identified minors. Meta also uses age prediction, but the PG-13 update works on a different layer: rather than relying on technical detection alone, the company frames its content rules around the familiar cultural touchstone of PG-13 ratings, something parents understand without technical explanation.

The research backing the changes reveals Meta's cautious approach. The company collected over 3 million content ratings from thousands of parents globally. This extensive consultation serves dual purposes: genuinely informing policy decisions while creating defensible documentation should regulators question the adequacy of protections.

Technical implementation exposes platform mechanics

The updated content policies extend beyond Meta's existing restrictions on sexually suggestive content, graphic images, and adult products like tobacco or alcohol. New guidelines now hide or restrict posts containing strong language, certain risky stunts, and content potentially encouraging harmful behaviors such as marijuana paraphernalia displays.

Instagram's technology proactively identifies content that violates the updated guidelines across multiple areas of the platform. Teens cannot follow accounts that regularly share age-inappropriate content. If teens already follow such accounts, they can no longer see or interact with those accounts' content, cannot send them direct messages, and cannot see their comments under other users' posts. The restriction works in both directions: flagged accounts also cannot follow teens, message them, or comment on their posts.
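Meta has not published how this rule is enforced. The sketch below is a minimal illustration, assuming a hypothetical account model with an `is_teen` attribute and a `flagged_mature` flag for accounts that regularly share age-inappropriate content; neither name comes from Meta. It shows the symmetric check the paragraph describes: one test blocks a teen from reaching a flagged account and also blocks a flagged account from reaching a teen.

```python
from dataclasses import dataclass

# Interaction types named in the article; the string labels are illustrative.
RESTRICTED_ACTIONS = {"follow", "view_content", "message", "comment"}


@dataclass(frozen=True)
class Account:
    handle: str
    is_teen: bool = False
    # Hypothetical flag for accounts that regularly share age-inappropriate content.
    flagged_mature: bool = False


def can_interact(actor: Account, target: Account, action: str) -> bool:
    """Block restricted actions whenever one side is a teen and the other is flagged.

    The check is symmetric and takes no follow-graph input, so an existing
    follow does not preserve access to a flagged account.
    """
    if action not in RESTRICTED_ACTIONS:
        raise ValueError(f"unknown action: {action}")
    teen_to_flagged = actor.is_teen and target.flagged_mature
    flagged_to_teen = actor.flagged_mature and target.is_teen
    return not (teen_to_flagged or flagged_to_teen)


# Hypothetical accounts used to exercise the rule in both directions.
teen = Account("teen_user", is_teen=True)
flagged = Account("flagged_creator", flagged_mature=True)
adult = Account("adult_user")

for actor, target in [(teen, flagged), (flagged, teen), (teen, adult)]:
    print(actor.handle, "->", target.handle, can_interact(actor, target, "message"))
```

Keeping the rule in a single symmetric predicate means the "teen reaches flagged account" and "flagged account reaches teen" cases cannot drift apart as new interaction types are added.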

Search functionality receives significant modifications. The platform blocks results for a wider range of mature terms beyond previously restricted topics like suicide, self-harm, and eating disorders. Terms including "alcohol" and "gore" now return no content results for teen accounts, even when misspelled. This is a substantial expansion of content filtering, and it may also reduce the discoverability of legitimate material such as health education and harm reduction information.
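Meta has not said how its search filter handles misspellings. One common way to approximate that behavior is fuzzy string matching, and the Python sketch below uses the standard library's `difflib.get_close_matches` as a stand-in. The blocklist holds only the two terms the article names. The `is_blocked_for_teen` helper and its 0.8 similarity cutoff are hypothetical.

```python
import difflib
import re

# Illustrative blocklist: "alcohol" and "gore" come from the article.
# Meta's full list of restricted terms is not public.
BLOCKED_TERMS = ["alcohol", "gore"]


def is_blocked_for_teen(query: str, cutoff: float = 0.8) -> bool:
    """Return True if any word in the query exactly or approximately matches a blocked term.

    difflib.get_close_matches scores candidates with SequenceMatcher.ratio().
    The 0.8 cutoff is an assumption, not a known Meta parameter.
    """
    for token in re.findall(r"[a-z]+", query.lower()):
        if difflib.get_close_matches(token, BLOCKED_TERMS, n=1, cutoff=cutoff):
            return True
    return False


for q in ["Alcohl drinks", "gorge hiking", "more cats"]:
    print(q, is_blocked_for_teen(q))
```

At this cutoff, "Alcohl drinks" is blocked because the misspelling scores about 0.92 against "alcohol". A harmless query such as "gorge hiking" is blocked too, because "gorge" scores about 0.89 against "gore". That false positive shows how tolerant matching can also hide legitimate content.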

Content filtering prevents inappropriate material from appearing in recommendations, feeds, stories, or comments—even when shared by followed accounts. Links to restricted content sent through direct messages cannot be opened. AI experiences also operate under PG-13 guidelines, meaning "AIs should not give age-inappropriate responses that would feel out of place in a PG-13 movie."

In September, content reviews by parents in the US and UK showed that fewer than 2% of posts recommended to teens were judged inappropriate by a majority of parents. The figure looks favorable but obscures scale. Across the volume of recommendations served to a teen user base in the hundreds of millions, even a rate below 2% could translate into millions of potentially inappropriate exposures.

The Limited Content option

Meta introduced a new "Limited Content" setting for families preferring stricter controls beyond PG-13 standards. This setting filters additional content and removes the ability to see, leave, or receive comments under posts. Starting in 2026, this setting will further restrict AI conversations teens can have on the platform.

A recent Ipsos survey commissioned by Meta found that 95% of US parents surveyed consider the updated settings helpful, and 96% said they appreciate the Limited Content option, regardless of whether they choose to enable it. These numbers warrant scrutiny: Meta commissioned the research, which may have influenced its framing and interpretation.

The company implemented new feedback mechanisms for parents. The platform will run regular surveys inviting parents to review posts and confirm appropriateness for teens. Parents using supervision tools can now flag posts they believe should be hidden from teens, with submissions sent to Meta's teams for prioritized review.


Marketing implications reshape youth audience strategies

These changes significantly restrict how brands interact with younger demographics. Direct messaging faces severe limitations: teens under 16 can only receive messages from accounts they follow or are already connected to. Cold outreach strategies targeting teen users become essentially impossible.

Content visibility for teen accounts is constrained by stricter algorithmic filtering and private account defaults. Brands targeting teen audiences must develop content satisfying both algorithmic safety parameters and parental approval mechanisms. The emphasis shifts toward family-oriented marketing approaches acknowledging increased parental oversight.

The modifications follow broader industry trends. Google tightened advertising rules to protect minors in January 2025, consolidating five distinct policies into a single comprehensive framework. This policy convergence across major platforms creates more consistent restrictions, but it also removes any competitive advantage a platform might once have gained from lax enforcement.

Meta's removal of detailed targeting exclusions compounds these challenges. The January 2025 change eliminated advertisers' ability to exclude audiences using detailed targeting options. This reduces precision in campaigns that must now contend with both weaker targeting and stronger content restrictions for younger users.

Third-party verification services responded with enhanced brand safety controls. DoubleVerify launched 30 new avoidance categories in February 2025, including Youth and Young Adults classifications. These tools provide brands with additional filtering beyond platform-native systems, supporting compliance with industry-specific marketing guidelines.

The enforcement challenge

Meta acknowledges system imperfections. "Just like you might see some suggestive content or hear some strong language in a PG-13 movie, teens may occasionally see something like that on Instagram—but we're going to keep doing all we can to keep those instances as rare as possible," the announcement states.

This admission carries weight given Meta's recent content moderation challenges. The company ended its US third-party fact-checking program in April 2025, after acknowledging that potentially 10-20% of its enforcement actions were mistakes. That error rate contributed to user frustration with account suspensions, content removals, and reduced distribution for posts that may not have violated policies.

The platform uses age prediction technology to place teens into content protections even when they claim adult status. "We know teens may try to avoid these restrictions," the documentation acknowledges. This predictive approach addresses circumvention attempts but introduces additional accuracy concerns—false positives restrict adults while false negatives expose actual teens to inappropriate content.

International implementations reveal varying approaches. India received teen safety features on Safer Internet Day 2025, including automated time-tracking and a sleep mode that activates between 10 PM and 7 AM. This geographic variation shows how local regulatory environments can shape platform policies.

The advertising ecosystem adapts

The Teen Account system has grown to serve hundreds of millions of users since September 2024. In April 2025, Meta announced plans to extend Teen Account protections beyond Instagram to Facebook and Messenger. This cross-platform approach aims to create a consistent safety environment across Meta's ecosystem while simplifying compliance for content creators and advertisers.

Meta has reported at least 54 million active Teen Accounts globally since the September 2024 launch, with further rollout planned. The updated content settings began rolling out gradually to Teen Accounts in the US, UK, Australia, and Canada on October 14, 2025, with full implementation expected by year-end. Global expansion is planned after the initial rollout.

For marketing professionals, the changes require a strategic rethink of youth marketing. Brands must develop content that satisfies algorithmic safety parameters, recognizing that the penalties for content deemed inappropriate for teens may differ from those under previous enforcement mechanisms. The emphasis shifts toward value-driven content that parents and teens jointly approve rather than direct-to-teen marketing tactics.

These restrictions are likely to accelerate the move toward family-oriented marketing that accounts for the parental oversight now embedded in Meta's platforms. The competitive pressure also extends beyond Meta: Google's privacy chief criticized Meta's age verification approach in June 2025, warning that app store-based systems create unnecessary privacy risks for children.

The unresolved questions

The PG-13 framework raises fundamental questions about applying movie ratings to social media content. Movies undergo classification by industry boards evaluating complete works with narrative context. Social media content fragments into individual posts lacking overarching narrative frameworks. How does a meme referencing strong language compare to dialogue in a PG-13 film? The documentation provides no clear answers.

The economic incentives driving content creation complicate enforcement. Platform monetization programs fuel mass AI content production, with creators using generative AI tools to produce engagement-optimized material. Meta's Creator Bonus Program can create financial incentives for content designed to slip past filters while maximizing reach.

International regulatory divergence creates compliance complexities. The European Union's Digital Services Act establishes requirements that differ from US frameworks, forcing platforms to maintain multiple content moderation systems. These geographic variations in teen protection standards reflect cultural differences in what content is considered acceptable for minors.

Meta's announcement commits to ongoing improvements based on feedback and research. This commitment to iteration signals that the PG-13 framework is an initial implementation subject to refinement. The company's willingness to adjust policies based on performance data suggests a pragmatic approach, but it also shows that the current system remains experimental.

The ultimate test comes from sustained enforcement rather than policy announcements. Meta's track record includes earlier youth safety initiatives that ran into implementation problems. The PG-13 framework's success depends on technical systems accurately identifying restricted content, age prediction algorithms correctly classifying users, and moderation teams handling edge cases consistently.

For now, Instagram's teen users navigate a platform shaped by movie rating standards originally designed for theatrical exhibition. Whether this cinematic lens proves effective for social media content filtering remains an open question—one that hundreds of millions of teenage users will answer through their daily platform experiences.


Timeline

- September 17, 2024: Instagram launches Teen Accounts with built-in protections for users under 18

- January 26, 2025: Google consolidates advertising rules for minor protection across platforms

- January 26, 2025: Meta removes detailed targeting exclusions affecting audience controls

- February 11, 2025: Instagram expands teen safety features to India with automatic restrictions

- April 8, 2025: Meta announces enhanced Teen Account restrictions and expansion to Facebook and Messenger

- June 23, 2025: New COPPA rules take effect with major advertising changes

- July 30, 2025: Google begins machine learning age detection for ad protections

- October 14, 2025: Meta announces PG-13 rating alignment for Instagram Teen Accounts

- October 22, 2025: Google implements immediate suspension enforcement for child protection violations

- End of 2025: Expected full rollout completion in US, UK, Australia, and Canada

- 2026: Limited Content setting to restrict AI conversations for teens


Summary

Who: Meta's Instagram platform implemented changes affecting hundreds of millions of teen users under 18 globally, who cannot opt out of the new 13+ setting without parental permission. The company collected over 3 million content ratings from thousands of parents worldwide to inform the policy changes. Marketing professionals targeting teen audiences must now navigate significantly restricted interaction capabilities.

What: Instagram Teen Accounts now use PG-13 movie rating standards to determine content appropriateness, automatically hiding posts with strong language, risky stunts, and potentially harmful content including marijuana paraphernalia. The platform introduced a stricter "Limited Content" option that filters additional content and removes commenting capabilities, with planned AI conversation restrictions in 2026. Technical implementation includes blocking accounts sharing age-inappropriate content, restricting search results for mature terms even when misspelled, and preventing inappropriate content from appearing in recommendations, feeds, stories, or comments. AI experiences on the platform also operate under PG-13 guidelines to avoid age-inappropriate responses.

When: Meta announced the changes on October 14, 2025, with gradual rollout beginning the same day in the US, UK, Australia, and Canada. Full implementation is expected by year-end, representing the most significant update since Teen Accounts launched in September 2024. Global expansion is planned following initial implementation, with the Limited Content AI restrictions scheduled for 2026. The announcement follows months of research conducted throughout 2024 and early 2025, during which Meta refined age-appropriate guidelines through extensive parent feedback.

Where: Initial rollout covers the United States, United Kingdom, Australia, and Canada, with global expansion planned after the initial rollout. Meta has reported at least 54 million active Teen Accounts globally since the September 2024 launch. Meta plans to apply similar protections to Facebook, creating a unified approach across its platform ecosystem. The changes apply across Instagram features including Feed, Stories, Reels, Search, Direct Messages, and AI interactions.

Why: Meta faces mounting regulatory pressure from multiple jurisdictions implementing stricter youth protection requirements, including new COPPA rules that took effect in June 2025, as well as competitive pressure from moves such as Google's deployment of machine learning age detection in July 2025. The company collected over 3 million content ratings from thousands of parents worldwide to refine age-appropriate guidelines, and a Meta-commissioned Ipsos survey found that 95% of US parents consider the updated settings helpful. The PG-13 framework gives parents a familiar cultural touchstone from movie ratings while maintaining platform engagement for teenage users. Meta acknowledges that no system is perfect and commits to continuous improvement, recognizing that teens may occasionally see content comparable to what appears in PG-13 movies. The changes also address age misrepresentation by using age prediction technology to place teens into content protections even when they claim adult status. For marketing professionals, the restrictions necessitate fundamental shifts toward family-oriented approaches that satisfy both algorithmic safety parameters and parental approval mechanisms, as direct teen targeting becomes increasingly difficult across Meta's platforms.