Google's AI Mode enables visual search exploration

On September 30, Google introduced visual search capabilities to AI Mode in the United States, allowing users to explore and shop with conversational queries that return image results.

According to the announcement, AI Mode in Google Search can now process natural language questions and return visual results that users refine through an ongoing conversation. The feature targets situations where words fall short of communicating a visual idea, from an interior design aesthetic to a specific clothing style.

Users can input queries conversationally and receive collections of images matching their descriptions. Each result includes clickable links for additional information. The system accepts text descriptions, uploaded images, or photos taken directly through the interface. On mobile devices, users can search within specific images and ask follow-up questions about visible elements.

Subscribe PPC Land newsletter ✉️ for similar stories like this one. Receive the news every day in your inbox. Free of ads. 10 USD per year.

Technical architecture

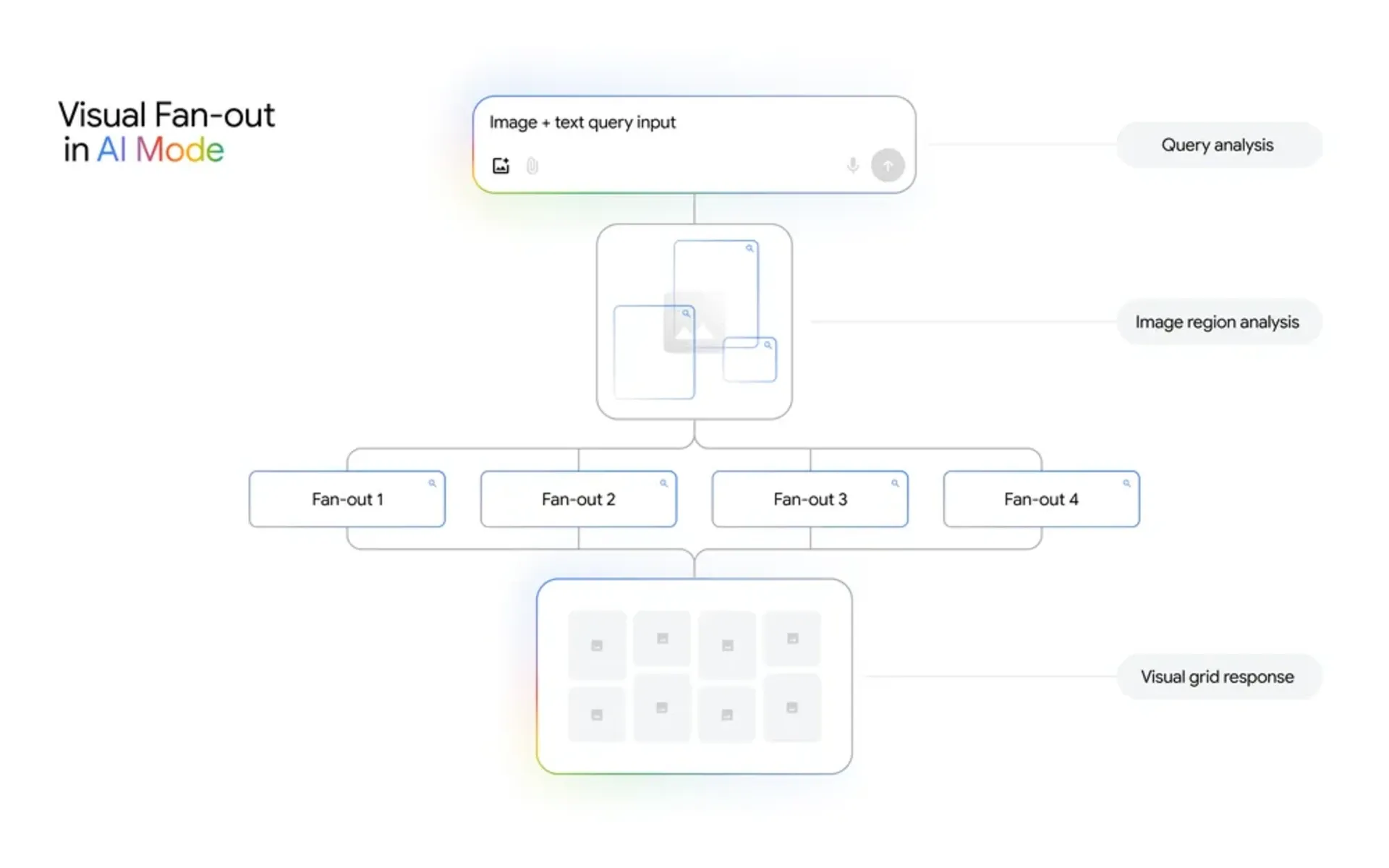

The capability relies on what Google calls a "visual search fan-out" technique. According to the announcement, this method analyzes an image comprehensively, identifying the primary subject along with subtle details and secondary objects, and then runs multiple queries simultaneously in the background.

The technology combines the existing Lens and Image search functionality in Google Search with the multimodal and language capabilities of Gemini 2.5, extending Google's query fan-out approach specifically to visual understanding. When processing an uploaded or captured image, the system analyzes its visual content while interpreting the accompanying natural language query. According to data Google reported in July 2025, visual searches grew 65% year over year, indicating substantial adoption of image-based search.
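Google has not published how visual search fan-out is implemented. The Python sketch below only illustrates the general idea under stated assumptions: an image-analysis step (not shown) has already produced a list of detected elements, each marked as a primary subject or a secondary detail, and `run_search` is a hypothetical stand-in for a backend search call. Each element becomes its own query, and `asyncio.gather` issues the queries concurrently rather than one after another.

```python
import asyncio
from dataclasses import dataclass


@dataclass(frozen=True)
class DetectedElement:
    """Hypothetical output of an image-analysis step."""
    label: str
    is_primary: bool


async def run_search(query: str) -> list[str]:
    """Hypothetical stand-in for a backend search call (not a real Google API)."""
    await asyncio.sleep(0.01)  # simulate network latency
    return [f"result for '{query}'"]


def build_queries(user_text: str, elements: list[DetectedElement]) -> list[str]:
    """Fan one request out into one query per detected element,
    each combined with the user's natural-language question."""
    # sorted() is stable: primary subjects move to the front,
    # secondary details keep their original relative order.
    ordered = sorted(elements, key=lambda e: not e.is_primary)
    return [f"{user_text} {e.label}" for e in ordered]


async def fan_out(user_text: str, elements: list[DetectedElement]) -> dict[str, list[str]]:
    queries = build_queries(user_text, elements)
    # gather() schedules every search at once and waits for all of them.
    results = await asyncio.gather(*(run_search(q) for q in queries))
    return dict(zip(queries, results))


if __name__ == "__main__":
    elements = [
        DetectedElement("rattan lamp", is_primary=False),
        DetectedElement("boho living room", is_primary=True),
        DetectedElement("jute rug", is_primary=False),
    ]
    for query, hits in asyncio.run(fan_out("ideas like", elements)).items():
        print(query, "->", hits)
```

Because the simulated searches run concurrently, total latency is roughly that of the slowest query rather than the sum of all of them, which is the practical appeal of fanning out.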

Google describes Gemini 2.5 as natively multimodal: the model processes multiple data types simultaneously, with text, images, video, and audio converted into token representations during training. The September announcement builds on the Gemini multimodal capabilities detailed in July, which demonstrated improved video understanding and integrated spatial reasoning.

Shopping functionality

The update includes shopping features that interpret natural language product descriptions. Users can ask for items like "barrel jeans that aren't too baggy" instead of working through traditional filters for style, rise, color, size, or brand, and then refine the results with follow-ups such as "I want more ankle length."
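Conversational refinement amounts to carrying state across turns: each new message adds to or overrides the constraints from earlier ones instead of starting a fresh search. The Python sketch below shows that idea under loud assumptions. `PHRASE_RULES` is a hypothetical lookup table standing in for model-based language understanding, whose details Google has not published, and mapping "aren't too baggy" to a `relaxed` fit is purely illustrative.

```python
from dataclasses import dataclass, field

# Hypothetical phrase -> (attribute, value) table. A real system would
# interpret free text with a language model; a lookup keeps this runnable.
PHRASE_RULES = {
    "barrel jeans": ("category", "barrel jeans"),
    "aren't too baggy": ("fit", "relaxed"),
    "not too baggy": ("fit", "relaxed"),
    "ankle length": ("length", "ankle"),
}


@dataclass
class ShoppingSession:
    # Constraints accumulated over the whole conversation.
    filters: dict[str, str] = field(default_factory=dict)

    def refine(self, utterance: str) -> dict[str, str]:
        """Merge attributes mentioned in this turn into the existing filters.
        A later mention of the same attribute overwrites the earlier value."""
        text = utterance.lower()
        for phrase, (attribute, value) in PHRASE_RULES.items():
            if phrase in text:
                self.filters[attribute] = value
        return dict(self.filters)  # return a copy so callers cannot mutate state


if __name__ == "__main__":
    session = ShoppingSession()
    print(session.refine("barrel jeans that aren't too baggy"))
    print(session.refine("I want more ankle length"))
```

The second turn keeps `category` and `fit` from the first and adds `length`, mirroring the refine-by-follow-up flow described above without the user touching a filter menu.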

Google's Shopping Graph, containing more than 50 billion product listings, powers these results. According to the company, over 2 billion listings receive updates every hour. The database covers products from major retailers and local shops, presenting reviews, deals, colorways, and availability information.
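The two figures Google cites can be put in proportion with a few lines of arithmetic. Both are stated as lower bounds ("more than" and "over"), so the ratios below are approximations. The full-pass figure additionally assumes, hypothetically, that updates are spread evenly and no listing is updated twice; Google does not say how updates are distributed.

```python
# Figures from Google's announcement; both are stated as lower bounds.
TOTAL_LISTINGS = 50_000_000_000    # "more than 50 billion" listings
UPDATES_PER_HOUR = 2_000_000_000   # "over 2 billion" listings updated hourly

share_per_hour = UPDATES_PER_HOUR / TOTAL_LISTINGS   # fraction touched each hour
updates_per_second = UPDATES_PER_HOUR / 3600         # average update rate
# Hypothetical: time to touch every listing once if updates never repeat.
hours_for_full_pass = TOTAL_LISTINGS / UPDATES_PER_HOUR

print(f"share of catalog updated per hour: {share_per_hour:.0%}")          # 4%
print(f"average updates per second: {updates_per_second:,.0f}")            # 555,556
print(f"hours for one full pass (even spread): {hours_for_full_pass:g}")   # 25
```

Put plainly, about 4% of the catalog changes every hour, an average of more than half a million listing updates per second.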

Each shopping result links to the retailer's website for purchase. According to Google, the Shopping Graph spans stores worldwide.

Shopping integration has expanded across multiple platforms since October 2024, when Google added Shopping ads to Google Lens, which was then handling nearly 20 billion visual searches a month. The September AI Mode update carries these capabilities into conversational search.


Market positioning

The timing follows several visual commerce developments. Amazon launched Lens Live on September 2, offering real-time product scanning with AI assistant integration. Google launched Search Live on September 24, enabling voice and camera queries for all U.S. users. Pinterest introduced Top of Search ads on September 25, aimed at visual shopping behavior.

Search behavior continues to shift toward visual inputs. On September 16, ChatGPT improved its detection of shopping intent, a sign that conversational AI platforms are prioritizing commercial queries. Google held 87% of the global search market in Q1 2025, according to Cloudflare data, though AI-powered alternatives are growing.

The feature arrived one day after Google updated its logo design on September 29, when the company refreshed visual elements across its product ecosystem. That update came approximately five months after Google introduced gradient effects to its primary "G" logo in May 2025.

Implementation details

The multimodal approach offers multiple entry points: text descriptions, uploaded images, or photos taken directly in the interface. Each method feeds into the same visual understanding system. The query fan-out technique generates multiple simultaneous searches from a single user input, unlike traditional sequential refinement, where users manually adjust their query one parameter at a time.

Recognizing secondary objects and subtle details implies computer vision capabilities beyond basic object detection, and processing those elements without slowing down search requires computing resources at scale.

On mobile, users can also search within images, a capability that goes beyond what desktop offers. The addition reflects the role of mobile devices as the primary search tool for many users, particularly when shopping.

Keeping 50 billion listings current with 2 billion updates per hour is a significant technical requirement across global retail markets, and the scale suggests substantial investment in infrastructure.

Marketing implications

For marketers and advertisers, the update changes how products appear in search results. Natural language descriptions could surface products that rank differently than they do in traditional keyword-based searches. A user describing "barrel jeans that aren't too baggy" might see different results than someone searching "barrel jeans."

Product listing optimization may need to account for conversational patterns: descriptions that match natural language queries could become more valuable than those optimized solely for keyword matching. The Shopping Graph's hourly updates also put pressure on retailers to keep product information current, since outdated inventory, pricing, or availability data could reduce visibility if the system favors fresh listings.
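For retailers, the practical side of the freshness concern is knowing which of their own listings have gone stale. The Python sketch below flags listings whose data has not been refreshed within a chosen window. The `Listing` fields and the 24-hour default are hypothetical; Google has not published a freshness threshold, and nothing here reflects how the Shopping Graph actually scores listings.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Listing:
    sku: str
    price: float
    in_stock: bool
    last_updated: datetime  # timezone-aware UTC timestamp


def stale_listings(
    listings: list[Listing],
    now: datetime,
    max_age: timedelta = timedelta(hours=24),  # hypothetical threshold
) -> list[str]:
    """Return the SKUs whose price/stock data is older than max_age."""
    return [item.sku for item in listings if now - item.last_updated > max_age]


if __name__ == "__main__":
    now = datetime(2025, 9, 30, 12, 0, tzinfo=timezone.utc)
    catalog = [
        Listing("JEANS-BARREL-28", 79.0, True, now - timedelta(hours=2)),
        Listing("JEANS-BARREL-30", 79.0, False, now - timedelta(days=3)),
    ]
    print(stale_listings(catalog, now))  # ['JEANS-BARREL-30']
```

Passing `now` explicitly, rather than reading the clock inside the function, keeps the check deterministic and easy to test against fixed timestamps.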

Visual content quality takes on greater importance when images serve as search entry points. Product photography, lifestyle images, and visual presentation become direct factors in search visibility rather than supporting elements. Google's statement about showing stores "from major retailers to local shops" suggests broad inclusion, though how visibility is actually distributed between large and small retailers will only become clear through use.

Google introduced a broad set of AI advertising tools at Think Week 2025 in mid-September, including loyalty program integration and shoppable video formats. The AI Mode visual search update extends these capabilities into organic search, blurring the line between paid and organic discovery.

Availability parameters

Google announced the rollout on September 30, and the feature launched that week nationwide for users in the United States. The announcement limited availability to English and to the U.S.

Although the interface is simple, building interactions that feel natural requires substantial backend processing that remains invisible to users.

AI Mode expanded to five new languages on September 8, adding Hindi, Indonesian, Japanese, Korean, and Portuguese support. The September 30 visual search update launched only in English initially, suggesting separate development and rollout timelines for visual capabilities versus language expansion.

Search behavior studies indicate fundamental shifts in user expectations. AI Overviews now serve 1.5 billion users monthly, according to July 2025 data. Traditional SEO approaches focused on ranking individual pages become less relevant as AI systems synthesize information from multiple sources.


Timeline

- May 2025: Google updated its primary "G" logo with gradient effects for the first time in nearly 10 years

- July 23, 2025: Google reported 65% year-over-year growth in visual searching and revealed AI Overviews drive 10% search growth

- September 2, 2025: Amazon announced Lens Live with real-time scanning and Rufus AI integration for tens of millions of U.S. customers

- September 8, 2025: Google expanded AI Mode to five new languages including Hindi, Indonesian, Japanese, Korean, and Portuguese worldwide

- September 16, 2025: ChatGPT enhanced search with accuracy improvements and shopping detection capabilities

- September 24, 2025: Google launched Search Live for voice and camera queries for all U.S. users

- September 25, 2025: Pinterest launched Top of Search ads aimed at visual shopping

- September 29, 2025: Google updated its logo design with new visual elements

- September 30, 2025: Google announced AI Mode visual search capabilities, launching in English in the United States


Summary

Who: Google, specifically its Search and Consumer Shopping product teams, launched the update. The announcement came from Robby Stein, VP of Product for Google Search, and Lilian Rincon, VP of Product for Consumer Shopping.

What: A major update to AI Mode in Google Search enabling visual exploration through conversational queries. The feature combines natural language processing with visual search results, powered by Gemini 2.5 and Google's Shopping Graph containing over 50 billion product listings. Users can describe what they're seeking conversationally or upload images, then refine results through follow-up queries. The system uses a visual search fan-out technique to analyze images comprehensively and run multiple background queries simultaneously.

When: Google announced the feature on September 30, 2025, with rollout beginning that week. The Shopping Graph updates over 2 billion product listings hourly to maintain current information.

Where: The feature launched in English in the United States. It works across devices, with additional mobile-specific functionality for searching within images. The Shopping Graph covers stores worldwide, from major retailers to local shops.

Why: The update addresses situations where describing visual concepts through words proves difficult. Google designed the feature for scenarios ranging from finding design inspiration to shopping for specific products. The conversational approach eliminates manual filter navigation, while Shopping Graph integration connects visual search directly to purchase opportunities. For Google, the feature represents an extension of existing search capabilities into more natural interaction patterns, potentially capturing queries that traditional text-based search handles poorly.