Google updates prohibited use policy for generative AI with clearer guidelines

New policy changes focus on security, privacy and content restrictions while allowing exceptions for educational and research use.

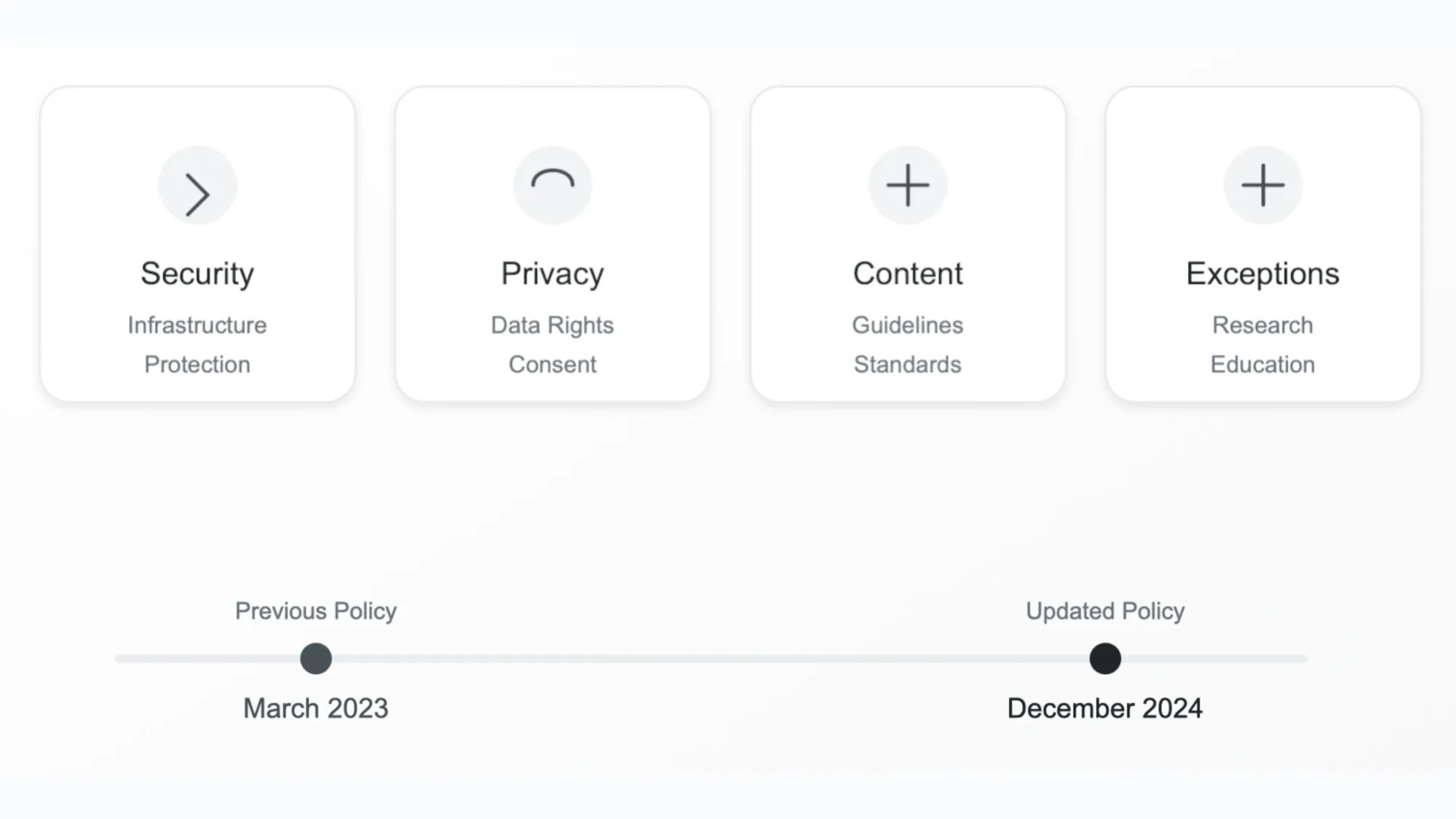

On December 17, 2024, Google announced updates to its Generative AI Prohibited Use Policy, the first comprehensive revision since March 2023. According to the official announcement, the changes clarify existing rules rather than introduce new restrictions.

The updated policy outlines specific prohibited behaviors and introduces allowances for certain academic and research applications. A comparison of the previous and current versions shows substantial changes in structure and in the precision of the language.

The revision reorganizes the policy into four main categories: dangerous or illegal activities, security compromises, explicit content, and misinformation. The document keeps strict prohibitions on generating content related to child exploitation, violent extremism, and non-consensual intimate imagery.

Security measures received particular attention in the revision. According to the policy text, users are explicitly forbidden from employing generative AI tools to facilitate "spam, phishing, or malware." The document specifically addresses attempts to circumvent safety filters or manipulate AI models to contravene established guidelines.

Privacy protections feature prominently in the updated framework. The policy prohibits tracking or monitoring individuals without consent and places restrictions on automated decision-making in high-risk domains. These domains specifically include "employment, healthcare, finance, legal, housing, insurance, or social welfare," as stated in the policy document.

Content restrictions maintain firm boundaries against sexually explicit material, with specific language prohibiting "content created for the purpose of pornography or sexual gratification." The policy distinguishes this from potentially acceptable content created for "scientific, educational, documentary or artistic purposes."

Misrepresentation and misinformation receive detailed treatment in the revised document. The policy explicitly prohibits impersonating individuals without disclosure and making misleading claims about expertise in sensitive areas such as health, finance, government services, or law.

A significant addition to the policy framework introduces exceptions for specific use cases. According to the document, Google may permit activities that would otherwise violate the guidelines based on "educational, documentary, scientific, or artistic considerations." The policy also allows exceptions where potential harms are outweighed by substantial benefits to the public.

Alongside these exceptions, the policy keeps firm prohibitions on harmful applications. Prohibited activities include generating content that facilitates self-harm, promotes hatred, or enables harassment and bullying. The policy also addresses the manipulation of democratic processes, explicitly restricting misleading claims about governmental procedures.

The restrictions on automated decision-making are narrowly framed. The document prohibits automated decisions that materially affect individual rights unless they are made under human supervision, particularly in sensitive sectors such as healthcare and finance.

The updated policy takes effect immediately, according to the announcement. The revisions keep the existing enforcement mechanisms while giving clearer guidance on acceptable use.

Transparency provisions in the updated policy address how AI involvement in content is represented. The document specifically prohibits misrepresenting "the provenance of generated content by claiming it was created solely by a human."

Infrastructure protection receives dedicated attention in the security section. The policy prohibits actions that could compromise Google's services or those of other providers, with specific language addressing potential disruptions to technical infrastructure.

The policy framework demonstrates increased emphasis on precise definitions and explicit examples, moving away from broader categorical prohibitions. This approach aims to reduce ambiguity while maintaining comprehensive coverage of potential misuse scenarios.