Google CEO addresses AI doom scenarios with "self-modulating" safety theory

Sundar Pichai believes humanity's coordination response creates protective feedback loop against catastrophic AI risks.

Sundar Pichai, CEO of Google and Alphabet, has presented a nuanced perspective on artificial intelligence existential risks, arguing that humanity's natural tendency to coordinate in the face of threats creates a "self-modulating" safety mechanism against AI-related catastrophes. In a comprehensive interview published on June 5, 2025, Pichai addressed the concept of p(doom), the probability that advanced AI could lead to human extinction or civilizational collapse.

The Google executive acknowledged that "the underlying risk is actually pretty high" when discussing potential AI catastrophes. However, his outlook remains fundamentally optimistic due to what he describes as humanity's historical pattern of rising to meet existential challenges through unprecedented coordination and cooperation.

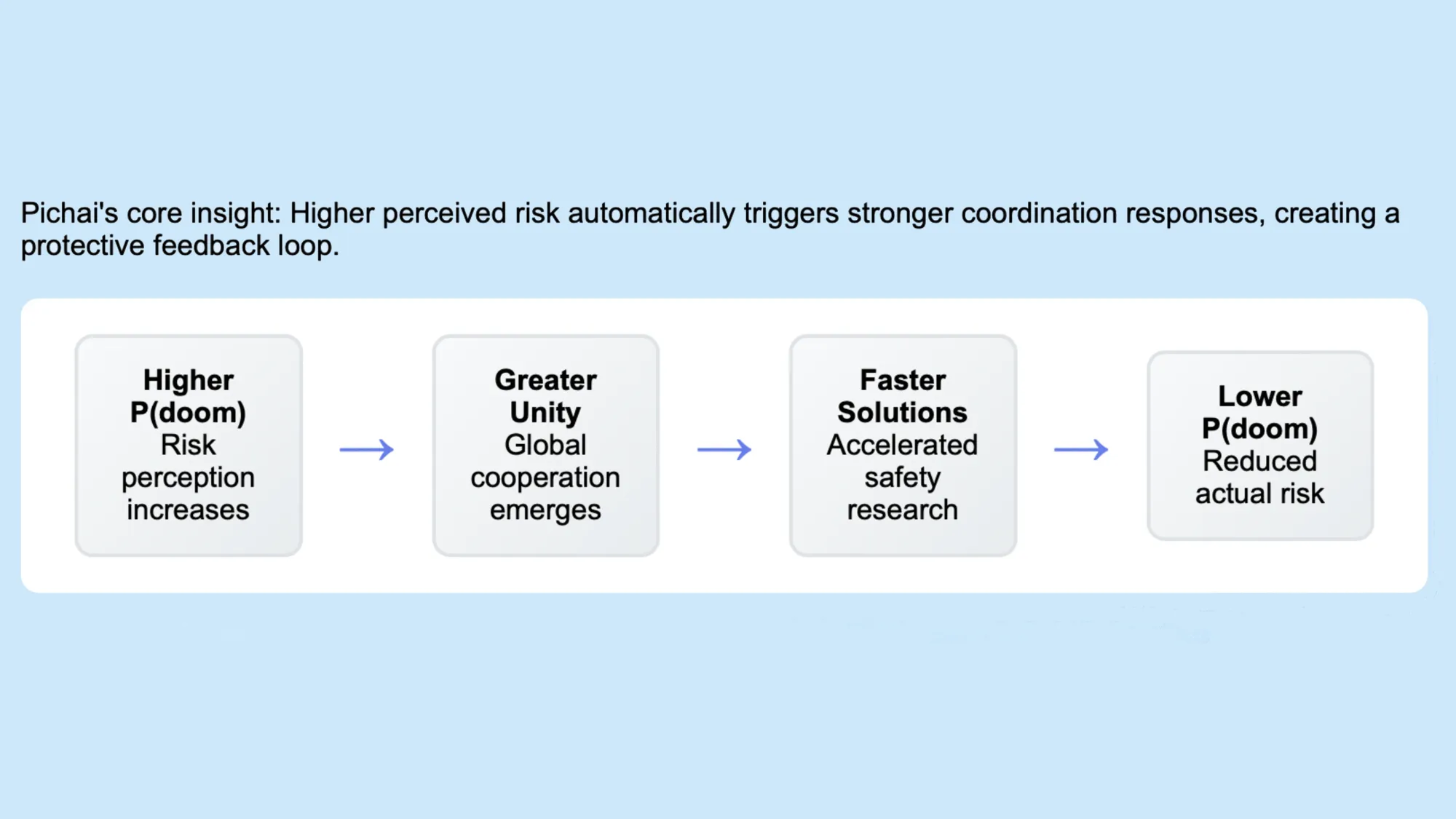

According to Pichai, the relationship between AI risk perception and human response creates a dynamic feedback loop that becomes more protective as threats appear more serious. This perspective challenges static risk assessments that treat doom probability as a fixed calculation, instead proposing that risk perception itself becomes a powerful mitigating factor.

The self-modulating safety mechanism explained

Pichai's central thesis rests on an observation about human behavior under threat conditions. When societies perceive existential risks as sufficiently high, they demonstrate remarkable abilities to transcend normal political, economic, and cultural divisions to address shared challenges. This coordination capability, according to Pichai, creates what he terms a "self-modulating aspect" to AI safety.

The mechanism operates through four interconnected stages that build upon each other as risk perception intensifies. First, higher perceived doom probability triggers greater awareness and concern across global populations. This heightened awareness then facilitates unprecedented levels of international cooperation as traditional barriers to coordination dissolve under existential pressure.

Enhanced cooperation subsequently accelerates safety research and implementation as resources, expertise, and political will align toward addressing the threat. Finally, these accelerated safety measures reduce actual risk levels, even as AI capabilities continue advancing. The cycle creates a protective feedback loop where increased risk perception paradoxically leads to decreased actual risk through coordinated human response.
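The four stages can be illustrated with a toy numerical sketch. This is not a model Pichai proposed: the function name `simulate_risk`, the parameters `capability_growth` and `response_strength`, and the update rule itself are all assumptions chosen only to show how a loop of this shape could behave.

```python
def simulate_risk(initial_risk, steps=50, capability_growth=0.10, response_strength=0.5):
    """Toy model of a self-modulating risk loop (illustrative assumption only).

    Each step walks through the four stages described in the article:
    awareness -> cooperation -> safety effort -> reduced risk, while
    advancing AI capabilities push risk upward at a fixed rate.
    """
    risk = initial_risk
    history = [risk]
    for _ in range(steps):
        awareness = risk                             # stage 1: perception tracks risk
        cooperation = response_strength * awareness  # stage 2: concern drives cooperation
        mitigation = cooperation                     # stage 3: cooperation funds safety work
        # stage 4: capabilities raise risk, mitigation lowers it; cap at 1.0
        risk = min(1.0, risk * (1.0 + capability_growth) * (1.0 - mitigation))
        history.append(risk)
    return history


if __name__ == "__main__":
    for start in (0.80, 0.05):
        path = simulate_risk(start)
        print(f"start={start:.2f} -> after 50 steps: {path[-1]:.3f}")
```

Under these assumed parameters, a high starting risk falls and a low starting risk rises, and both drift toward the same level, g / ((1 + g) k), roughly 0.18 when g = 0.10 and k = 0.5. That is one way to read "self-modulating": in the sketch, risk is set by the balance between capability growth and the strength of the coordinated response, not by its starting value. Real-world dynamics are far less tidy, and nothing here estimates an actual probability.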

This framework differs significantly from traditional risk models that assume static threat levels and fixed response capabilities. Instead, Pichai proposes that both threat assessment and response capacity evolve dynamically based on changing circumstances and perceptions.

Historical precedents for existential coordination

Pichai's optimism draws support from historical examples of humanity coordinating effectively against global threats. The Montreal Protocol's success in addressing ozone depletion demonstrates how quickly international cooperation can emerge when scientific consensus identifies clear existential risks. Similarly, the rapid development and global distribution of COVID-19 vaccines showed unprecedented coordination in medical research and manufacturing.

Climate change responses, while incomplete, illustrate both the challenges and possibilities of global coordination. Despite political disagreements about specific policies, the fundamental scientific consensus about climate risks has produced substantial international frameworks, technology sharing agreements, and coordinated research efforts that would have been impossible without shared threat perception.

Nuclear weapons treaties provide another relevant example. The arms control agreements that followed the Cuban Missile Crisis emerged precisely because both superpowers recognized that mutual annihilation was an unacceptable outcome. The existential nature of nuclear threats motivated cooperation that transcended ideological differences and geopolitical competition.

These historical precedents suggest that humanity possesses latent coordination capabilities that activate under sufficient existential pressure. Pichai argues that similar mechanisms would likely emerge as AI capabilities approach potentially dangerous thresholds.

Current AI risk landscape and coordination signals

The contemporary AI development environment already shows early signs of the coordination patterns that Pichai describes. Major AI laboratories have begun sharing safety research despite competitive pressures. OpenAI's decision to delay GPT-4 release for additional safety testing, Google's establishment of AI ethics boards, and Anthropic's focus on constitutional AI methods all reflect growing safety consciousness within the industry.

Government responses similarly demonstrate emerging coordination mechanisms. The European Union's AI Act represents the first comprehensive regulatory framework for artificial intelligence systems. The United States has established AI safety institutes and executive orders addressing AI governance. China has announced AI development principles that emphasize safety and social benefit alongside technological advancement.

International forums increasingly address AI coordination challenges. The Global Partnership on AI, the Partnership on AI, and various United Nations initiatives create institutional frameworks for ongoing cooperation. While these efforts remain preliminary, they establish precedents and relationships that could scale rapidly under increased threat perception.

Academic research communities have also shown remarkable coordination around AI safety challenges. The emergence of AI alignment as a legitimate research field, the growth of organizations like the Center for AI Safety, and the increasing integration of safety considerations into mainstream AI research all suggest that scientific communities are already responding to perceived risks.

Technical challenges requiring coordinated solutions

The specific nature of AI development creates technical challenges that particularly benefit from coordinated approaches. Unlike nuclear weapons, which require rare materials and specialized facilities, AI systems can potentially be developed by smaller teams with increasingly accessible computational resources. This democratization of AI development makes coordination both more necessary and more challenging.

Monitoring AI capability development requires sophisticated technical infrastructure that benefits from shared resources and expertise. No single organization or government possesses complete visibility into global AI development activities. Effective monitoring systems would require unprecedented information sharing and technical cooperation among institutions that typically guard proprietary information carefully.

Safety research itself presents coordination challenges that mirror other scientific endeavors addressing global risks. Understanding AI alignment problems requires diverse expertise spanning computer science, cognitive science, philosophy, and social sciences. The complexity of these challenges benefits from collaborative research approaches that pool expertise and resources across institutional boundaries.

International standards for AI development and deployment create additional coordination requirements. Technical standards for safety testing, capability assessment, and risk evaluation must be developed cooperatively to ensure compatibility and effectiveness across different AI development efforts worldwide.

Timeline projections and critical decision points

Pichai's interview revealed his expectation that artificial general intelligence will arrive shortly after 2030, describing current AI systems as being in an "Artificial Jagged Intelligence" phase where capabilities are highly uneven across different domains. This timeline creates specific windows for implementing coordination mechanisms before AI systems reach potentially dangerous capability levels.

The period between 2025 and 2028 likely represents a critical window for establishing effective coordination frameworks. During this timeframe, AI capabilities will continue advancing rapidly while remaining sufficiently limited that coordination efforts can be implemented and tested before reaching potentially irreversible decision points.

International governance structures typically require years to negotiate and implement. The complexity of AI governance suggests that effective frameworks must begin development well before they become strictly necessary. This lead time requirement creates urgency around current coordination efforts, even while AI systems remain in relatively safe capability ranges.

Technical safety research similarly requires substantial development timelines. Understanding AI alignment problems and developing robust safety measures will likely require sustained research efforts over multiple years. The coordination mechanisms that Pichai describes must therefore activate soon enough to enable adequate safety research before advanced AI systems are deployed.

Implications for marketing and business strategy

The coordination mechanisms that Pichai describes carry significant implications for business strategy and marketing approaches. Companies operating in AI-adjacent industries should anticipate substantial changes in regulatory environments, public expectations, and competitive dynamics as coordination efforts intensify.

Marketing strategies must account for evolving public attitudes toward AI development and deployment. As risk awareness increases, consumer preferences may shift toward companies that demonstrate clear commitments to AI safety and responsible development practices. Brand positioning around AI safety could become an increasingly important competitive differentiator.

Regulatory compliance requirements will likely expand substantially as coordination mechanisms mature. Companies should prepare for more stringent AI governance requirements, mandatory safety testing protocols, and potential restrictions on certain AI applications or development approaches.

Supply chain considerations may also evolve as coordination efforts address AI development resources and infrastructure. International cooperation on AI governance could affect access to computational resources, specialized hardware, and technical expertise in ways that reshape industry structure and competitive dynamics.

Global cooperation challenges and resistance factors

Despite Pichai's optimism about humanity's coordination capabilities, substantial challenges could impede effective responses to AI risks. Geopolitical competition creates incentives for accelerated AI development that may conflict with safety considerations. Nations may resist coordination mechanisms that appear to advantage competitors or constrain their technological sovereignty.

Economic interests similarly create resistance to coordination efforts that might slow AI development or impose additional costs on AI companies. The substantial financial investments in AI development create pressure for rapid deployment and commercialization that may conflict with careful safety testing and gradual capability scaling.

Technical disagreements about AI risks themselves present coordination challenges. Without clear scientific consensus about specific risk mechanisms and timelines, building political support for coordination efforts becomes more difficult. Different technical communities may assess risks differently, complicating efforts to establish shared threat perceptions.

Cultural and ideological differences regarding technology governance, individual privacy, and appropriate government roles in technology development could impede international cooperation even when threat perceptions align. These deeper philosophical differences may prove more difficult to overcome than technical disagreements.

The marketing community's role in AI coordination

Marketing professionals are uniquely positioned to influence public understanding and acceptance of AI coordination efforts. Clear communication about AI capabilities, limitations, and safety measures helps build the informed public discourse that effective coordination requires.

Brand messaging around AI development and deployment shapes public expectations and preferences in ways that can support or undermine coordination efforts. Companies that emphasize safety, transparency, and responsible development help normalize these values within industry culture and consumer expectations.

Educational content marketing can contribute to public understanding of AI risks and coordination mechanisms. Well-designed educational campaigns help build the informed public opinion that democratic coordination processes require while avoiding both excessive fear and dangerous complacency.

Industry leadership in establishing voluntary safety standards and best practices can accelerate coordination efforts by demonstrating feasible approaches to responsible AI development. Marketing strategies that highlight these leadership positions can create competitive advantages while supporting broader coordination goals.

Timeline

March 2025: The European Commission initiated proceedings against Google regarding potential Digital Markets Act violations related to AI Overviews, demonstrating early regulatory responses to AI integration in core internet services.

April 2025: Google expanded multimodal AI capabilities in search while implementing enhanced privacy protections, showing how safety considerations are being integrated into product development cycles.

May 2025: The company deployed AI-powered scam protection across multiple platforms, illustrating how AI safety research addresses immediate consumer protection needs alongside longer-term existential risks.

June 2025: Pichai's comprehensive interview provided the most detailed public explanation of Google's perspective on AI coordination mechanisms and safety timelines.

The trajectory of these developments suggests that coordination mechanisms are already emerging across regulatory, technical, and business domains. The pattern aligns with Pichai's theory that risk perception drives coordination responses, as each advancement in AI capabilities triggers corresponding safety and governance measures.

Understanding these emerging coordination patterns helps marketing professionals anticipate and prepare for the substantial changes that AI development will bring to business environments, consumer expectations, and regulatory frameworks over the coming years. The self-modulating safety mechanism that Pichai describes may prove to be one of the most important factors shaping the AI landscape as capabilities continue advancing toward artificial general intelligence.