Former Google product manager abandons AI healthcare apps over safety concerns

Former Google Bard product manager abandons nine-month healthcare AI project citing patient safety risks and technical limitations.

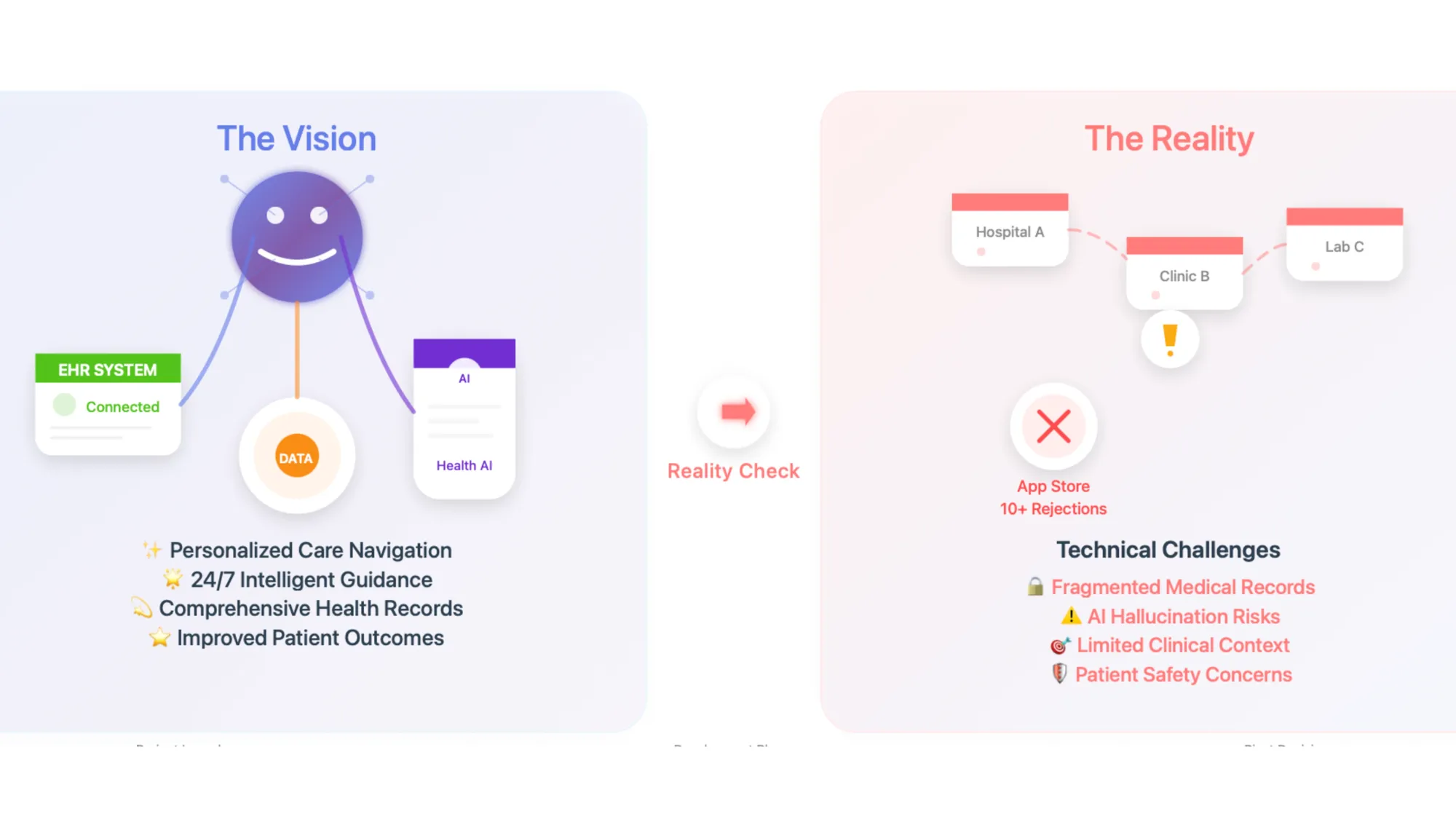

A former Google product manager who helped build the company's Bard AI system has abandoned his nine-month effort to create AI-powered healthcare applications for cancer patients, citing fundamental safety concerns and technical limitations that he believes cannot be resolved by current large language model technology.

Amir Kiani, who led Google Search integration for Google Bard and previously worked as a product manager across multiple Google AI initiatives, announced his decision to pivot away from clinical AI applications on June 6, 2025, after developing multiple prototypes designed to help cancer patients navigate their care.

According to Kiani's detailed technical analysis published on his personal blog, his research and development efforts spanned nine months and included building chatbots with electronic health record integrations, appointment preparation tools, and iOS applications that Apple rejected more than ten times. His work represented one of the most comprehensive attempts by a former major tech company AI leader to create patient-facing clinical applications using current LLM technology.

The announcement carries particular significance given Kiani's technical background and direct experience with enterprise AI development. His professional history includes serving as the first full-time software engineer at genomics startup Bina Technologies, which was later acquired by pharmaceutical giant Roche, and conducting graduate research at Stanford University under Andrew Ng that resulted in publications in npj Digital Medicine.

Kiani's decision stems from what he identifies as three fundamental technical barriers that current AI systems cannot overcome for safe clinical use. The first involves what he terms "impossible context building," referring to the fragmented nature of medical records across different healthcare systems and providers. According to his analysis, even comprehensive data integration through FHIR interfaces and Apple's HealthKit cannot provide the complete medical picture necessary for safe clinical recommendations.

"The fragmentation is staggering," Kiani wrote in his technical analysis. "Each provider uses different systems, different formats, different standards. Even within the same hospital network, data might be siloed across departments." This fragmentation means that AI systems consistently operate with incomplete information, creating potentially dangerous gaps in clinical reasoning.
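The gap Kiani describes can be illustrated with a minimal Python sketch. It is not his implementation: the category names, the field aliases, and the `build_context` helper are all hypothetical, chosen only to show how records pulled from differently formatted sources might be merged and then checked for missing pieces before any AI system is asked to reason over them.

```python
from dataclasses import dataclass, field

# Hypothetical categories an assistant might require before answering.
REQUIRED_CATEGORIES = {"diagnosis", "medications", "labs", "imaging"}

# Illustrative aliases only; real provider systems differ far more than this.
ALIASES = {
    "dx": "diagnosis",
    "meds": "medications",
    "rx": "medications",
    "lab_results": "labs",
    "radiology": "imaging",
}


@dataclass
class PatientContext:
    # Maps a shared category name to the entries found for it.
    records: dict = field(default_factory=dict)

    def add(self, category, entry):
        self.records.setdefault(category, []).append(entry)

    def missing(self):
        """Return required categories that no source supplied."""
        return sorted(REQUIRED_CATEGORIES - set(self.records))


def normalize(source_name, raw):
    """Map one provider's field names onto the shared category names."""
    for key, value in raw.items():
        category = ALIASES.get(key, key)
        yield category, {"source": source_name, "value": value}


def build_context(sources):
    """Merge raw records from several providers into one PatientContext."""
    ctx = PatientContext()
    for name, raw in sources.items():
        for category, entry in normalize(name, raw):
            ctx.add(category, entry)
    return ctx
```

Calling `build_context` on two made-up sources, one exposing `dx` and `meds` and another exposing `rx` and `lab_results`, yields a context whose `missing()` list contains `imaging`. The sketch can only detect categories it already knows to look for, which is part of the problem the article describes: a record the system has never heard of leaves no visible gap.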

The second technical challenge Kiani identifies involves "impossible grounding," referring to AI systems' tendency to generate confident-sounding but inaccurate responses when working with incomplete medical information. According to his testing across multiple prototype applications, current LLM technology cannot reliably indicate when it lacks sufficient information to provide safe medical guidance, instead defaulting to generating plausible-sounding responses that may be clinically inappropriate.

His third concern centers on what he calls "limited usefulness" in clinical decision-making contexts. Through his research into complex cancer treatment decisions, including his late aunt's Stage 4 pancreatic cancer diagnosis, Kiani concluded that the most critical healthcare decisions involve deeply personal, contextual factors that extend beyond clinical data. These include quality-of-life considerations, religious beliefs, family dynamics, and personal values that AI systems cannot meaningfully incorporate into their recommendations.

The technical challenges Kiani encountered extended beyond software development into regulatory and platform restrictions. Apple rejected his iOS applications more than ten times, despite his implementation of HIPAA-compliant backend processing and comprehensive privacy policies. The rejections appear to stem from Apple's reluctance to allow off-device processing of health data, even with explicit user consent and healthcare compliance measures.

Kiani's development process used several AI integration techniques, including Retrieval-Augmented Generation (RAG), function calling, and the Model Context Protocol (MCP). He worked primarily with Cursor and Claude development tools, allowing him to create functional prototypes within days rather than months. His applications included features for medical record summarization, appointment transcription and analysis, and integration with authoritative medical sources like cancer.org.
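For readers unfamiliar with Retrieval-Augmented Generation, the following Python sketch shows the general pattern under simplifying assumptions; it does not reproduce Kiani's code. The two-entry corpus, the word-overlap scoring, and the `min_score` threshold are all hypothetical stand-ins for a real index of vetted sources and a real retriever. The point of the sketch is the final step: when no source is relevant enough, the function returns `None` so the caller can decline instead of letting a model improvise.

```python
import re
from collections import Counter

# Tiny stand-in corpus; a real system would index vetted medical sources.
DOCS = {
    "chemo-nausea": (
        "Nausea is a common side effect of chemotherapy and can often "
        "be managed with anti-nausea medication."
    ),
    "appointment-prep": (
        "Before an oncology appointment, write down your questions and "
        "bring a list of current medications."
    ),
}


def tokens(text):
    """Count lowercase alphabetic words in the text."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def overlap(query, doc):
    """Fraction of query words that also appear in the document."""
    q, d = tokens(query), tokens(doc)
    shared = sum((q & d).values())
    return shared / max(1, sum(q.values()))


def retrieve(query, min_score=0.25):
    """Return (doc_id, text) for the best match, or None if too weak."""
    score, doc_id = max((overlap(query, text), doc_id)
                        for doc_id, text in DOCS.items())
    return (doc_id, DOCS[doc_id]) if score >= min_score else None


def build_prompt(query):
    """Build a grounded prompt, or None when nothing relevant was found."""
    hit = retrieve(query)
    if hit is None:
        return None  # caller should decline rather than let the model guess
    doc_id, passage = hit
    return (f"Answer using only this source [{doc_id}]:\n{passage}\n\n"
            f"Question: {query}")
```

A question about managing nausea from chemotherapy retrieves the `chemo-nausea` passage, while an unrelated question about stocks returns `None`. A threshold of this kind only filters on retrieval relevance; it cannot tell whether the patient's own record is complete, which is the harder grounding problem the article attributes to Kiani's analysis.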

The business model challenges for clinical AI applications present additional barriers beyond technical limitations. According to Kiani's analysis, traditional health technology monetization strategies face significant obstacles when applied to AI-powered clinical tools. Direct-to-consumer subscription models struggle with low patient willingness to pay for health tools, particularly when general-purpose AI systems like ChatGPT provide similar capabilities without subscription costs.

Partnership-based models with healthcare providers, insurance companies, or pharmaceutical companies face their own challenges due to the fundamental technical limitations Kiani identified. The inability to guarantee consistent, safe clinical guidance makes enterprise adoption problematic for healthcare organizations concerned about liability and patient safety.

Kiani's decision represents a significant case study in the current limitations of AI technology for clinical applications. His technical background and comprehensive development effort provide insight into challenges that may affect other healthcare AI initiatives. The timing of his announcement, coming as major technology companies continue to invest heavily in healthcare AI applications, highlights ongoing tensions between technological capability and clinical safety requirements.

The healthcare AI market has attracted significant investment and attention from major technology companies. Google, Microsoft, Amazon, and OpenAI have all announced healthcare-focused AI initiatives, while numerous startups continue to develop clinical AI applications. However, Kiani's experience suggests that fundamental technical limitations may constrain the near-term viability of patient-facing clinical AI systems.

For marketing professionals and healthcare technology companies, Kiani's analysis reveals important considerations about AI application development and market positioning. His experience demonstrates that even sophisticated technical implementation cannot overcome fundamental limitations in current AI technology when applied to high-stakes clinical scenarios.

The regulatory environment for healthcare AI applications remains complex and evolving. The Food and Drug Administration has cleared or authorized certain AI applications for clinical use, but these typically involve narrow, well-defined tasks rather than the broad clinical guidance applications that Kiani attempted to develop. His experience with Apple's App Store review process illustrates that healthcare AI developers must also navigate platform policies in addition to formal regulation.

Kiani's technical analysis also reveals important insights about the gap between AI capability demonstrations and real-world clinical deployment. While current AI systems can perform impressively on medical licensing examinations and clinical case studies, the controlled nature of these assessments may not reflect the complex, incomplete information environment that characterizes actual patient care scenarios.

The announcement comes as healthcare organizations continue to grapple with AI integration strategies. Many health systems have begun implementing AI tools for administrative tasks, diagnostic imaging analysis, and clinical decision support. However, Kiani's experience suggests that patient-facing clinical guidance applications may require more advanced AI capabilities than current technology can provide safely.

Despite abandoning clinical AI applications, Kiani indicated he plans to continue working in healthcare technology, potentially focusing on non-clinical aspects of care navigation such as financial guidance for patients. He also expressed interest in developing educational resources to help technically sophisticated patients and caregivers use existing AI tools more effectively for healthcare research and preparation.

The marketing implications of Kiani's decision extend beyond healthcare technology to broader questions about AI application marketing and positioning. His experience demonstrates the importance of clearly defining the limitations and appropriate use cases for AI applications, particularly in high-stakes domains where incorrect guidance could cause harm.

For healthcare marketing professionals, Kiani's analysis provides insight into patient expectations and concerns about AI-powered health applications. His research revealed that patients often expect AI health tools to provide definitive medical guidance, creating challenges for applications that must balance usefulness with safety by avoiding specific medical advice.

The technical challenges Kiani identified may inform the development strategies of other healthcare AI companies. His experience suggests that current AI technology may be better suited for supporting healthcare professionals rather than providing direct patient guidance, potentially shifting market focus toward clinical decision support tools rather than consumer-facing applications.

Timeline

September 2024: Kiani begins nine-month healthcare AI development project

October-December 2024: Initial prototype development and user research with patients, physicians, and caregivers

November 21, 2024: Google announces Japan will allow online pharmacy and telemedicine prescription drug advertising starting January 2025

December 1, 2024: Policy implementation details confirmed for Japanese healthcare advertising expansion

January-March 2025: Development of web applications with EHR integration and iOS applications

February 9, 2025: Google expands addiction treatment advertising in Canada through LegitScript certification

April-May 2025: Multiple Apple App Store rejections and development of alternative web-based solutions

June 6, 2025: Public announcement of project termination and pivot decision