EU publishes final General-Purpose AI Code of Practice

Commission receives industry framework as August 2025 compliance deadline approaches.

The European Commission officially received the finalized General-Purpose AI Code of Practice on July 10, 2025, according to documents published by the AI Office. The comprehensive framework, developed through a multi-stakeholder process involving nearly 1,000 participants, establishes voluntary guidelines for AI model providers ahead of mandatory compliance requirements taking effect August 2, 2025.

According to the Model Documentation Form, the three-chapter code addresses transparency, copyright, and safety obligations under Articles 53 and 55 of the AI Act. The Safety and Security chapter applies specifically to general-purpose AI models with systemic risk: models whose cumulative training compute exceeds 10^25 floating-point operations (FLOP), or models designated by the Commission based on reach and capabilities.
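The compute threshold can be illustrated with a minimal sketch. It assumes the widely used "6 × parameters × training tokens" rule of thumb for estimating the training compute of dense transformer models; that heuristic is an assumption for illustration and is not prescribed by the AI Act or the code. The function names and the example model size are hypothetical.

```python
# Presumption threshold on cumulative training compute (AI Act, Article 51(2)).
SYSTEMIC_RISK_FLOP_THRESHOLD = 1e25


def estimate_training_flop(n_params: float, n_tokens: float) -> float:
    """Rough training-compute estimate using the 6 * N * D heuristic.

    Assumption for illustration only: providers may measure compute
    directly or use a different methodology.
    """
    return 6.0 * n_params * n_tokens


def presumed_systemic_risk(training_flop: float, designated: bool = False) -> bool:
    """True if compute exceeds the threshold or the Commission designated the model."""
    return designated or training_flop > SYSTEMIC_RISK_FLOP_THRESHOLD


if __name__ == "__main__":
    # Hypothetical model: 70 billion parameters trained on 15 trillion tokens.
    flop = estimate_training_flop(70e9, 15e12)
    print(f"{flop:.2e}", presumed_systemic_risk(flop))  # prints "6.30e+24 False"
```

The `designated` flag stands in for the Commission's separate designation route, which does not depend on the compute figure at all.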

Subscribe the PPC Land newsletter ✉️ for similar stories like this one. Receive the news every day in your inbox. Free of ads. 10 USD per year.

Summary

Who: The European Commission AI Office received the final General-Purpose AI Code of Practice from independent experts and stakeholder working groups representing nearly 1,000 participants including AI providers, civil society organizations, and EU Member States.

What: A three-chapter voluntary compliance framework addressing transparency, copyright, and safety obligations for general-purpose AI model providers under the EU AI Act, including comprehensive documentation requirements, rights reservation protocols, and systemic risk management measures.

When: The Commission officially received the final code on July 10, 2025. AI Act obligations for general-purpose AI models take effect on August 2, 2025, with enforcement becoming applicable one year later for new models and two years later for models already on the market.

Where: The framework applies to providers placing general-purpose AI models on the European Union market, regardless of provider location, with particular focus on models with systemic risk capabilities and those integrated into downstream AI systems.

Why: The code provides voluntary compliance pathways ahead of mandatory AI Act enforcement, addressing transparency needs for downstream system integration, copyright protection for rightsholders, and systemic risk mitigation for the most advanced AI models that could impact public safety and fundamental rights.

Framework addresses systemic risk management

The safety framework requires providers to adopt "state-of-the-art" risk assessment methodologies and implement comprehensive security measures. According to Measure 1.1 of the Safety chapter, companies must establish trigger points for conducting model evaluations throughout the development lifecycle, not just before market placement.

The documentation shows providers must maintain detailed records of training data provenance, computational resources, and energy consumption. For energy reporting, the framework requires measurements in megawatt-hours "recorded with at least two significant figures," and providers must disclose the methodology they used when critical information from compute providers is unavailable.
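The rounding rule is simple arithmetic, sketched below under the assumption that a provider starts from a metered total in kilowatt-hours. The function names are hypothetical. Because the requirement is "at least two" significant figures, `sig=2` is treated as a minimum; a provider could report more.

```python
import math


def round_sig(value: float, sig: int = 2) -> float:
    """Round value to `sig` significant figures (sig >= 1)."""
    if value == 0:
        return 0.0
    # Position of the leading digit, e.g. 4870 -> 3, 0.0456 -> -2.
    exponent = math.floor(math.log10(abs(value)))
    return round(value, sig - 1 - exponent)


def energy_mwh_for_report(energy_kwh: float, sig: int = 2) -> float:
    """Convert a metered kWh total to MWh and round to `sig` significant figures."""
    return round_sig(energy_kwh / 1000.0, sig)


if __name__ == "__main__":
    # Hypothetical training run that consumed 4,870,000 kWh.
    print(energy_mwh_for_report(4_870_000))  # prints 4900.0
```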

Security requirements include protection against "insider threats" and implementation of "confidential computing" using hardware-based trusted execution environments. The framework defines specific mitigation objectives including prevention of unauthorized model parameter access and implementation of multi-factor authentication for personnel accessing model weights.

Transparency measures target downstream integration

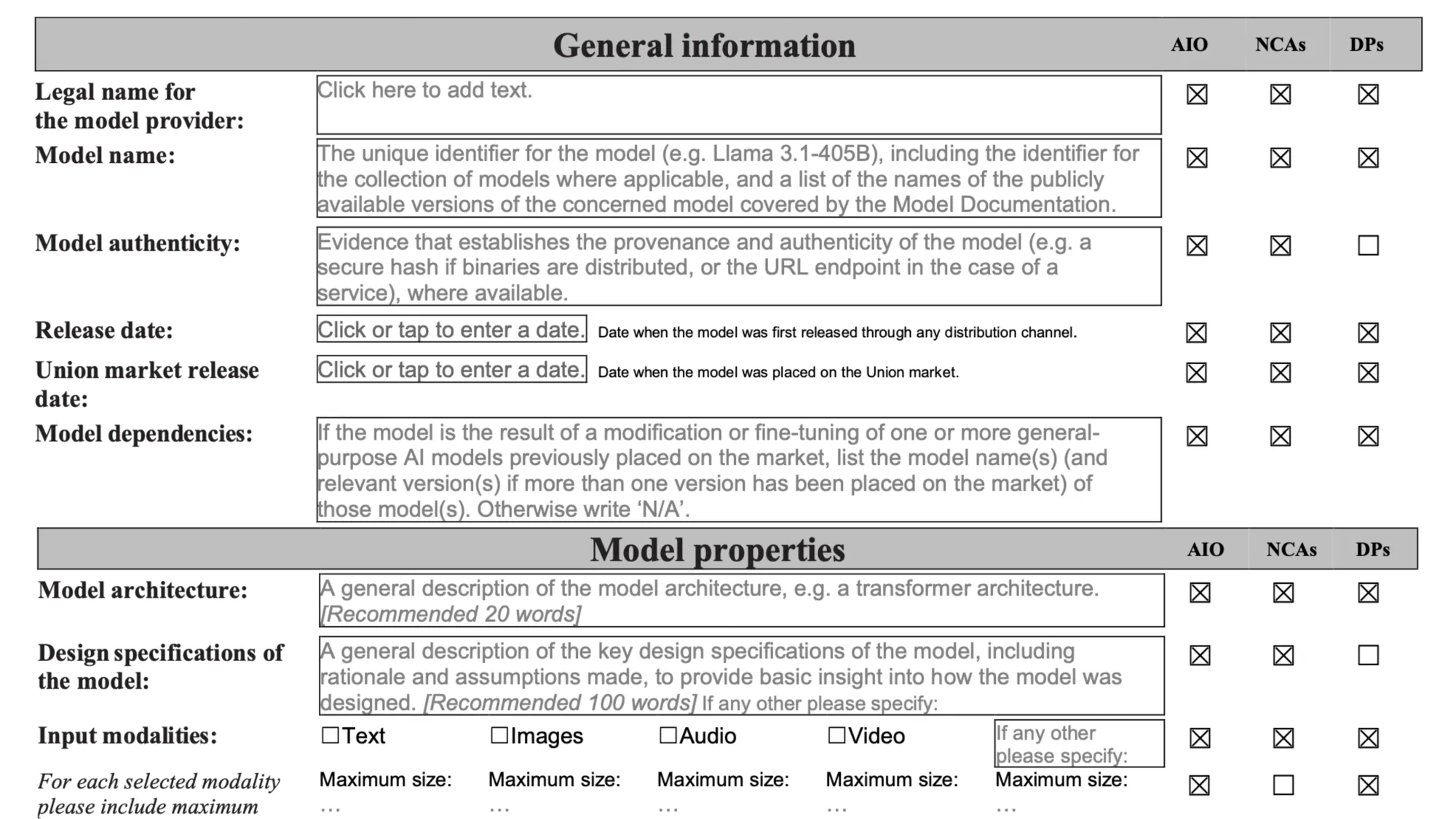

The transparency chapter establishes comprehensive documentation requirements for all general-purpose AI model providers. According to the Model Documentation Form template, companies must disclose model architecture details, training methodologies, and integration specifications to facilitate downstream AI system compliance.

The framework requires disclosure of model input and output modalities, with maximum processing limits where defined. Providers must document "the type and nature of AI systems into which the general-purpose AI model can be integrated," with examples including autonomous systems, conversational assistants, and decision support systems.

Distribution channel information must include the level of access (weights-level or black-box) and links to accessibility information. License documentation requirements vary by recipient: downstream providers must receive a detailed license categorization, while regulatory authorities receive a simplified disclosure.
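One way to picture these documentation duties is as a structured record that a provider fills once and then filters per recipient. The sketch below is hypothetical: the field names are not the official Model Documentation Form identifiers, and the license split simply mirrors the description above (detailed categorization for downstream providers, a simplified reference for authorities).

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class AccessLevel(Enum):
    WEIGHTS = "weights-level"
    BLACK_BOX = "black-box"


class Recipient(Enum):
    DOWNSTREAM_PROVIDER = "downstream provider"
    AUTHORITY = "regulatory authority"


@dataclass
class ModelDocumentation:
    """Hypothetical subset of Model Documentation Form content."""
    model_name: str
    input_modalities: list[str]
    output_modalities: list[str]
    max_input_tokens: int | None      # None when no processing limit is defined
    integration_types: list[str]      # e.g. "conversational assistant"
    access_level: AccessLevel
    access_info_url: str
    license_category: str             # detailed categorization of the license
    license_url: str

    def license_disclosure(self, recipient: Recipient) -> dict[str, str]:
        # Downstream providers get the detailed categorization;
        # authorities get a simplified reference to the license.
        if recipient is Recipient.DOWNSTREAM_PROVIDER:
            return {"category": self.license_category, "url": self.license_url}
        return {"url": self.license_url}
```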

Copyright obligations address rights reservations

The copyright chapter mandates compliance with EU Directive 2019/790 Article 4(3) regarding rights reservations. According to Measure 1.3, providers must employ web crawlers that "read and follow instructions expressed in accordance with the Robot Exclusion Protocol (robots.txt)" as specified in Internet Engineering Task Force Request for Comments No. 9309.
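RFC 9309 defines how a crawler picks its rule group and which rule wins. The minimal sketch below follows those core rules: group selection by user-agent token with a fallback to `*`, `*` and `$` wildcards, the most specific (longest) matching rule taking precedence, and `allow` winning ties. It is simplified by assumption: it does not fetch robots.txt, normalize percent-encoding, or cache results, and the crawler name in the example is hypothetical. Python's standard `urllib.robotparser` also exists, but it applies the first matching rule in file order rather than the most specific one.

```python
from __future__ import annotations

import re
from urllib.parse import urlsplit


def rule_to_regex(rule_path: str) -> re.Pattern:
    # '*' matches any character sequence; a trailing '$' anchors the end of the path.
    anchored = rule_path.endswith("$")
    if anchored:
        rule_path = rule_path[:-1]
    pattern = ".*".join(re.escape(part) for part in rule_path.split("*"))
    return re.compile(pattern + ("$" if anchored else ""))


def parse_robots(text: str) -> dict[str, list[tuple[bool, str]]]:
    """Map each lowercase user-agent token to its (is_allow, path) rules."""
    groups: dict[str, list[tuple[bool, str]]] = {}
    agents: list[str] = []
    in_rules = False
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        key, value = (part.strip() for part in line.split(":", 1))
        key = key.lower()
        if key == "user-agent":
            if in_rules:  # a user-agent line after rules starts a new group
                agents, in_rules = [], False
            agents.append(value.lower())
            groups.setdefault(value.lower(), [])
        elif key in ("allow", "disallow") and agents:
            in_rules = True
            if value:  # empty rule values match nothing, so skip them
                for agent in agents:
                    groups[agent].append((key == "allow", value))
    return groups


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    groups = parse_robots(robots_txt)
    rules = groups.get(user_agent.lower(), groups.get("*", []))
    parts = urlsplit(url)
    path = (parts.path or "/") + (f"?{parts.query}" if parts.query else "")
    if path == "/robots.txt":  # robots.txt itself is always fetchable
        return True
    best_len, best_allow = -1, True  # no matching rule means allowed
    for allow, rule_path in rules:
        if rule_to_regex(rule_path).match(path):
            # Most specific (longest) rule wins; on a tie, allow wins.
            if len(rule_path) > best_len or (len(rule_path) == best_len and allow):
                best_len, best_allow = len(rule_path), allow
    return best_allow
```

A rightsholder who adds a group such as `User-agent: ExampleAIBot` followed by `Disallow: /` would, under this logic, exclude that crawler from the whole site while leaving other crawlers governed by the `*` group.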

The framework requires identification of "other appropriate machine-readable protocols to express rights reservations" that have been adopted by international standardization organizations or represent state-of-the-art implementations. Providers must implement "proportionate technical safeguards to prevent their models from generating outputs that reproduce training content protected by Union law on copyright and related rights in an infringing manner."

Companies must establish complaint mechanisms allowing rightsholders to submit "sufficiently precise and adequately substantiated complaints concerning non-compliance" with copyright commitments. The framework requires responses within a "reasonable time" unless complaints are "manifestly unfounded" or identical to complaints already addressed.
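The two exceptions to the response duty can be expressed as a small triage step. This is a hypothetical sketch: the class and field names are invented, "manifestly unfounded" is modeled as a flag set by a human reviewer rather than decided automatically, and "identical" is approximated by matching normalized complaint fields.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Complaint:
    rightsholder: str
    work_id: str                  # hypothetical identifier of the affected work
    issue: str                    # free-text description of the alleged non-compliance
    manifestly_unfounded: bool = False  # set by a human reviewer


class ComplaintDesk:
    """Tracks handled complaints so identical repeats can be recognized."""

    def __init__(self) -> None:
        self._handled: set[tuple[str, str, str]] = set()

    def requires_response(self, complaint: Complaint) -> bool:
        # Normalize fields so trivially different resubmissions count as identical.
        key = (
            complaint.rightsholder.strip().lower(),
            complaint.work_id.strip().lower(),
            complaint.issue.strip().lower(),
        )
        if complaint.manifestly_unfounded or key in self._handled:
            return False
        self._handled.add(key)
        return True
```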

Industry response reflects compliance urgency

Article 56 of the AI Act set a compressed development schedule, requiring the code to be ready by May 2, 2025; the final version was delivered on July 10, more than two months after that deadline. According to the timeline documentation, the process included three drafting rounds with working group meetings and provider workshops between October 2024 and April 2025.

Working Group 1 addressed transparency and copyright under chairs Nuria Oliver (ELLIS Alicante Foundation) and Alexander Peukert (Goethe University Frankfurt). Working Groups 2-4 focused on risk identification, technical mitigation, and governance under chairs including Yoshua Bengio (Université de Montréal) and Marietje Schaake (Stanford University).

The Q&A document indicates the AI Office will offer collaborative implementation support during the first year after August 2, 2025. According to the guidance, providers adhering to the code who "do not fully implement all commitments immediately after signing" will be considered acting "in good faith" rather than violating AI Act obligations.

Enforcement framework takes phased approach

The AI Act's enforcement timeline establishes a graduated implementation schedule. According to the Q&A document, rules become enforceable by the AI Office "one year later as regards new models and two years later as regards existing models" placed on the market before August 2025.
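The phased schedule reduces to a date comparison. The sketch below assumes the reading given in the Q&A: obligations apply from August 2, 2025, AI Office enforcement starts one year later (August 2, 2026) for new models, and models placed on the market before August 2, 2025 have until August 2, 2027. The function name is hypothetical, and a model placed on the market after enforcement begins is treated as enforceable from its placement date.

```python
from datetime import date

OBLIGATIONS_APPLY = date(2025, 8, 2)
ENFORCEMENT_NEW_MODELS = date(2026, 8, 2)       # one year later
ENFORCEMENT_EXISTING_MODELS = date(2027, 8, 2)  # two years later


def enforcement_date(placed_on_market: date) -> date:
    """Earliest date the AI Office can enforce GPAI obligations for a model."""
    if placed_on_market < OBLIGATIONS_APPLY:
        return ENFORCEMENT_EXISTING_MODELS
    # New models: enforceable from August 2, 2026, or from placement if later.
    return max(placed_on_market, ENFORCEMENT_NEW_MODELS)
```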

The framework includes exemptions for models released under free and open-source licenses meeting Article 53(2) conditions, though these exemptions do not apply to general-purpose AI models with systemic risk. The documentation indicates transitional provisions require existing model providers to take "necessary steps" for compliance by August 2, 2027.
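The open-source exemption can be sketched as a set operation. Under Article 53(2), qualifying free and open-source models are exempt from the documentation duties in Article 53(1)(a) and (b), while the copyright policy and training-content summary in 53(1)(c) and (d) still apply; none of the exemptions hold for models with systemic risk, which also carry Article 55 obligations. The labels and function name are illustrative, and whether a model "qualifies" under Article 53(2) is taken as an input rather than assessed here.

```python
BASE_OBLIGATIONS = {
    "Art. 53(1)(a) technical documentation for authorities",
    "Art. 53(1)(b) information for downstream providers",
    "Art. 53(1)(c) copyright policy",
    "Art. 53(1)(d) public summary of training content",
}
OPEN_SOURCE_EXEMPT = {
    "Art. 53(1)(a) technical documentation for authorities",
    "Art. 53(1)(b) information for downstream providers",
}
SYSTEMIC_RISK_OBLIGATIONS = "Art. 55 systemic-risk obligations"


def applicable_obligations(open_source_53_2: bool, systemic_risk: bool) -> set[str]:
    """Illustrative set of GPAI obligations for a given model profile."""
    obligations = set(BASE_OBLIGATIONS)
    if open_source_53_2 and not systemic_risk:
        obligations -= OPEN_SOURCE_EXEMPT  # exemption never applies with systemic risk
    if systemic_risk:
        obligations.add(SYSTEMIC_RISK_OBLIGATIONS)
    return obligations
```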

Member States and the Commission will assess the code's adequacy in the coming weeks, with potential approval through an implementing act giving it "general validity within the Union." If the AI Office deems the code inadequate, or if it cannot be finalized by the required deadlines, the Commission may instead adopt common implementation rules through implementing acts.

Marketing industry implications

The framework's transparency requirements create new information flows between AI model providers and downstream system developers. For marketing technology companies integrating general-purpose AI models, the documentation requirements facilitate assessment of model capabilities and limitations relevant to advertising applications.

According to a recent PPC Land analysis, 68% of marketers plan to increase social media spending in 2025, with generative AI emerging as the leading consumer technology trend. The code's safety and transparency measures may influence how AI-powered advertising tools integrate with major platforms.

The framework's copyright provisions address content generation concerns relevant to marketing applications. Requirements for technical safeguards preventing copyright-infringing outputs could affect how AI tools assist with creative content development across advertising campaigns.

For agencies and marketing technology providers, the documentation requirements create opportunities to better understand model capabilities when selecting AI tools for campaign optimization, content generation, and audience targeting applications.

Timeline

- July 30, 2024 - AI Office launches call for Code of Practice participation

- September 30, 2024 - Kick-off plenary with nearly 1,000 participants

- December 11, 2024 - Second draft published with EU survey launch

- March 11, 2025 - Third draft released following working group meetings

- July 10, 2025 - Commission receives final Code of Practice

- August 2, 2025 - AI Act rules for general-purpose AI models take effect

- August 2, 2026 / August 2, 2027 - Enforcement becomes applicable for new and existing models, respectively