Dutch regulator publishes responses on human oversight in AI decision-making

Dutch data authority releases feedback summary from consultation on meaningful human intervention requirements, revealing complex implementation challenges across organizations.

The Dutch Data Protection Authority (AP) has published a comprehensive summary of responses to its consultation on meaningful human intervention in algorithmic decision-making, marking the latest development in European efforts to regulate automated systems under GDPR Article 22. The 5-page document, released on June 3, 2025, compiles feedback from government institutions, independent foundations, companies, industry organizations, and researchers without identifying specific contributors.


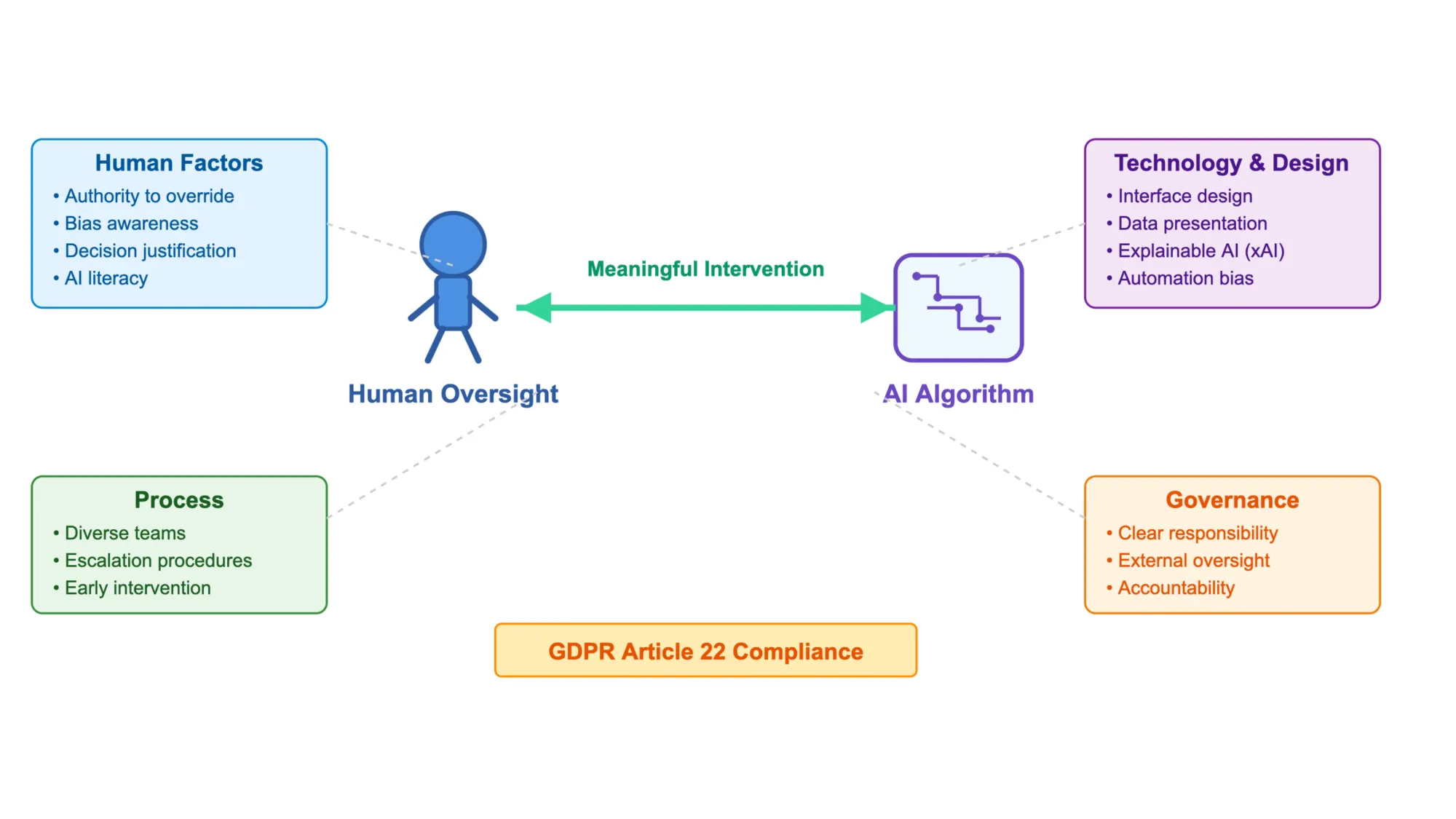

The consultation, which began earlier this year, sought practical input on guidelines for implementing meaningful human oversight in automated decision-making processes. Organizations across sectors have increasingly deployed algorithms to evaluate credit applications, screen job candidates, and make other decisions that significantly affect individuals. Under GDPR Article 22, people have the right not to be subject to a decision based solely on automated processing that produces legal effects concerning them or similarly significantly affects them. Where an exception allows such decisions, individuals can obtain human intervention, and that intervention must be meaningful rather than a formality.

According to the document, "verschillende respondenten vroegen aandacht voor andere kernbegrippen in Art. 22 AVG, bijvoorbeeld wat een 'besluit' precies is en wanneer een besluit iemand 'in aanmerkelijke mate treft'" (several respondents requested attention to other core concepts in Article 22 GDPR, such as what exactly constitutes a "decision" and when a decision "significantly affects" someone). The responses indicate that uncertainty extends to fundamental GDPR concepts beyond human intervention itself.

The feedback highlights complex technical challenges in implementing meaningful oversight. Respondents emphasized that meaningful human intervention becomes impossible when algorithms rely on incomprehensible data and processes. This particularly affects machine learning systems that identify complex patterns in data. Organizations expressed concerns about how to handle different algorithmic outputs beyond numerical scores, including images, texts, and various scenarios.

Several respondents noted the availability of Explainable AI (xAI) techniques that can provide explanations for generated outputs. According to the summary, these techniques should receive mention in future guidance documents. Organizations also requested more information about which types of data are better suited for human assessment versus algorithmic decision-making.
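To make the idea of an explanation concrete: in the simplest case, a linear scoring model, each input's contribution to a score can be computed exactly. The sketch below is a hypothetical illustration rather than a technique named in the AP summary; the feature names, weights, and baseline applicant are invented. For more complex machine learning models, model-agnostic methods such as SHAP or LIME estimate similar per-feature attributions.

```python
def explain_linear_score(weights, baseline, applicant):
    """Rank per-feature contributions to a linear score, relative to a baseline.

    For a linear model score(x) = sum(w_i * x_i) + b, the contribution of
    feature i is w_i * (x_i - baseline_i), and the contributions sum exactly
    to score(applicant) - score(baseline).
    """
    contributions = {
        name: w * (applicant[name] - baseline[name]) for name, w in weights.items()
    }
    # Largest effects first, so an assessor sees the main drivers at the top.
    return sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)


# Hypothetical credit-scoring model and inputs, for illustration only.
weights = {"income_k": 0.5, "debt_ratio": -2.0, "missed_payments": -1.0}
baseline = {"income_k": 3.0, "debt_ratio": 0.3, "missed_payments": 0}
applicant = {"income_k": 2.0, "debt_ratio": 0.6, "missed_payments": 1}

for feature, contribution in explain_linear_score(weights, baseline, applicant):
    print(f"{feature:>16}: {contribution:+.2f}")
```

Showing contributions relative to a baseline, rather than raw weights, tells the assessor why this particular applicant scored differently from a typical one.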

The consultation responses identified multiple forms of bias affecting both assessors and algorithms. Organizations reported challenges with automation bias, where humans overestimate algorithm performance and accuracy. The feedback also highlighted selective automation bias, where assessors follow algorithmic recommendations for certain groups while relying on human judgment for others. Additional concerns included overreliance and selection bias affecting decision-making processes.
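One way an organization might watch for the selective automation bias described above is to compare how often assessors follow the algorithm's recommendation for different groups of cases. The sketch below assumes a hypothetical review log and an arbitrary 15-percentage-point threshold. A gap is a prompt for investigation, not proof of bias, since groups can legitimately differ in how often the algorithm is right.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Review:
    """One assessor review of an algorithmic recommendation (hypothetical schema)."""
    group: str           # case group used for monitoring, e.g. a region or segment
    recommendation: str  # what the algorithm proposed
    final_decision: str  # what the assessor decided


def agreement_rates(reviews):
    """Share of reviews per group in which the assessor followed the algorithm."""
    followed = defaultdict(int)
    total = defaultdict(int)
    for r in reviews:
        total[r.group] += 1
        if r.final_decision == r.recommendation:
            followed[r.group] += 1
    return {group: followed[group] / total[group] for group in total}


def flag_selective_reliance(reviews, max_gap=0.15):
    """Return (flag, rates); flag is True when group agreement rates differ by more than max_gap."""
    rates = agreement_rates(reviews)
    if len(rates) < 2:
        return False, rates
    gap = max(rates.values()) - min(rates.values())
    return gap > max_gap, rates


reviews = [
    Review("A", "reject", "reject"),
    Review("A", "reject", "reject"),
    Review("B", "reject", "approve"),
    Review("B", "reject", "reject"),
]
flagged, rates = flag_selective_reliance(reviews)
print(flagged, rates)
```

Here assessors followed the algorithm in every group A case but only half of group B cases, which would warrant a closer look at how each group is being reviewed.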

Respondents emphasized specific cognitive problems that interfere with meaningful human oversight. The feedback specifically mentioned the WYSIATI (What-You-See-Is-All-There-Is) problem, which causes people to focus predominantly on visible information while ignoring unknown or forgotten elements. This narrow focus can result in hasty and potentially erroneous conclusions.

The responses revealed substantial concerns about how responsibility is distributed in algorithmic decision-making. Organizations questioned how to prevent the full responsibility for a decision from falling on individual assessors. Multiple respondents warned against shifting accountability to individual evaluators while broader organizational choices drive algorithmic errors. The feedback noted that assessor involvement can legitimize systems while simultaneously making them less accountable.

According to the document, "respondenten benoemen dat verwerkingsverantwoordelijkheden ook rekening dienen te houden met extern toezicht" (respondents note that processing responsibilities must also account for external oversight). Organizations need systems for recording processes and data management to demonstrate how decisions were reached and fulfill accountability obligations.

Technical interface design emerged as a critical factor in meaningful human intervention. Respondents emphasized that organizations must not only consider which data to show assessors and how, but also explain why specific data and presentation methods were chosen. This helps assessors better understand the information they evaluate.

The feedback underscored the importance of specific interface features including the ability to correct or remove data, access to comprehensive information, encouragement of human input, and avoidance of design elements that prompt automatic acceptance of algorithmic outputs. Organizations also suggested additional measures to prevent routine approval of algorithmic recommendations, such as presenting mixed results and implementing control questions.
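The summary does not say how control questions should work. One possible reading, sketched below as a hypothetical illustration, is to mix in cases whose correct outcome is already known and whose algorithmic recommendation is deliberately wrong, then measure how often assessors catch the error. A low catch rate would suggest routine approval. All names and data are invented.

```python
import random
from dataclasses import dataclass
from typing import Optional


@dataclass
class Case:
    case_id: str
    recommendation: str
    is_control: bool = False
    correct_decision: Optional[str] = None  # known in advance only for control cases


def build_queue(real_cases, control_cases, seed=None):
    """Shuffle control cases into the real review queue so assessors cannot tell them apart."""
    queue = list(real_cases) + list(control_cases)
    random.Random(seed).shuffle(queue)
    return queue


def control_catch_rate(queue, decisions):
    """Share of control cases where the assessor reached the known-correct decision.

    `decisions` maps case_id to the assessor's decision. Returns None if the
    queue contains no control cases.
    """
    controls = [c for c in queue if c.is_control]
    if not controls:
        return None
    caught = sum(1 for c in controls if decisions.get(c.case_id) == c.correct_decision)
    return caught / len(controls)


real = [Case("r1", "approve"), Case("r2", "reject")]
# Control cases carry a deliberately wrong recommendation.
controls = [
    Case("c1", "approve", is_control=True, correct_decision="reject"),
    Case("c2", "reject", is_control=True, correct_decision="approve"),
]
queue = build_queue(real, controls, seed=42)
decisions = {"r1": "approve", "r2": "reject", "c1": "reject", "c2": "reject"}
print(control_catch_rate(queue, decisions))
```

In this example the assessor caught one of the two planted errors. Because the rate says nothing about individual real cases, it works better as a periodic check on the review process than as a score for a single decision.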

Several responses addressed the relationship between external developers and organizations implementing algorithmic systems. Organizations questioned whether they have authority to impose specific requirements on system development or whether they remain subject to external developer decisions that might limit meaningful human intervention possibilities.

The consultation responses revealed significant interest in process-level considerations beyond individual assessments. Respondents endorsed the importance of diverse teams and inclusive workplaces for fair algorithmic decision-making processes. Some suggested that human intervention should occur earlier in the process rather than only at the final decision stage.

Organizations requested clarification on escalation procedures, including specific steps assessors can take when they have doubts about algorithms or outcomes. The feedback emphasized the need for clear documentation of when assessors disagree with algorithmic recommendations.
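Escalation and disagreement documentation lend themselves to a structured record. The sketch below is one hypothetical way to capture it: every assessor decision is logged alongside the algorithm's recommendation, and an override or escalation is rejected unless the assessor writes down why. The field names and the rationale rule are assumptions, not requirements from the AP. A log like this also supports the accountability point raised earlier, since it shows how each decision was reached.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class DecisionRecord:
    """One human decision on an algorithmic recommendation (hypothetical schema)."""
    case_id: str
    assessor_id: str
    recommendation: str
    final_decision: str
    rationale: Optional[str] = None
    escalated: bool = False
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    @property
    def disagrees(self) -> bool:
        return self.final_decision != self.recommendation


def record_decision(record: DecisionRecord, log: List[str]) -> None:
    """Validate a decision and append it to an append-only log as one JSON line.

    Assumed policy: overriding the algorithm or escalating requires a written rationale.
    """
    if (record.disagrees or record.escalated) and not record.rationale:
        raise ValueError(
            f"{record.case_id}: a rationale is required when overriding or escalating"
        )
    entry = asdict(record)
    entry["disagrees"] = record.disagrees
    log.append(json.dumps(entry, sort_keys=True))


audit_log: List[str] = []
record_decision(
    DecisionRecord("c1", "a7", "reject", "approve", rationale="Income data is outdated"),
    audit_log,
)
print(audit_log[0])
```

In practice the list would be replaced by durable, append-only storage; the list keeps the sketch self-contained.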

Multiple respondents highlighted the role that Data Protection Officers (DPOs) can play in internal oversight of meaningful human intervention in algorithmic decision-making. Organizations noted that different legal frameworks apply to public and private organizations, with potential implications for human intervention requirements.

The responses consistently emphasized ethical considerations in algorithmic decision-making beyond GDPR compliance. Organizations questioned whether algorithms represent appropriate tools for specific decision-making processes. Transparency concerns featured prominently, with some algorithms described as virtually impossible to follow and therefore insufficiently transparent for assessors.

According to the feedback, the legal status of the final guidance document requires clarification. Organizations want a clear understanding of whether the guidance carries regulatory force or serves as advisory material. The consultation summary indicates that the AP will use the collected input to write an updated version of the meaningful human intervention guidance, scheduled for publication later this year.

The consultation process demonstrates the complex intersection of data protection law, artificial intelligence, and organizational decision-making. As automated systems become more sophisticated and widespread, regulatory authorities face increasing pressure to provide practical guidance that balances innovation with individual rights protection.

The timing of this consultation aligns with broader European regulatory efforts addressing artificial intelligence. The EU AI Act, which entered into force in August 2024 and applies in stages from 2025 onward, sets obligations for providers of general-purpose AI models and requires human oversight measures for high-risk AI systems. This regulatory landscape creates additional compliance considerations for organizations implementing algorithmic decision-making systems.

For marketing professionals, these developments signal significant implications for customer acquisition, segmentation, and automated campaign optimization systems. Organizations using algorithmic tools for advertising targeting, customer scoring, or automated bidding may need to consider how to implement meaningful human oversight in these processes, particularly where automated decisions produce legal or similarly significant effects for individuals. The guidance could affect how marketing technology platforms design their interfaces and decision-making workflows.

The emphasis on transparency and explainability in the consultation responses suggests that marketing teams will need greater visibility into how algorithmic systems make targeting and optimization decisions. This could impact everything from programmatic advertising platforms to customer relationship management systems that use automated scoring or recommendation engines.

The feedback's focus on bias prevention has particular relevance for marketing applications, where algorithmic systems often make decisions about which audiences see specific advertisements or promotional offers. Organizations may need to implement additional oversight mechanisms to ensure their marketing algorithms do not inadvertently discriminate against protected groups or perpetuate existing biases.

Timeline

- March 27, 2025: Initial consultation guidance published seeking stakeholder input on practical implementation

- May 2025: Dutch Data Protection Authority publishes summary of consultation responses on meaningful human intervention

- June 3, 2025: AP releases comprehensive feedback document highlighting implementation challenges

- Later 2025: Updated guidance document expected based on consultation feedback

Related Stories

- May 23, 2025: Dutch Data Protection Authority releases comprehensive consultation on GDPR preconditions for generative AI

- April 13, 2025: Irish DPC launches Grok LLM training inquiry investigating X's use of EU user data for AI training

- March 27, 2025: Dutch regulator seeks input on meaningful human intervention in algorithmic decisions

- February 3, 2025: Belgian data protection case moves forward against DPG Media

- January 27, 2025: Advocate General sides with WhatsApp in landmark EDPB accountability case