AI content scraping controls evolve as tech giants respond to publisher concerns

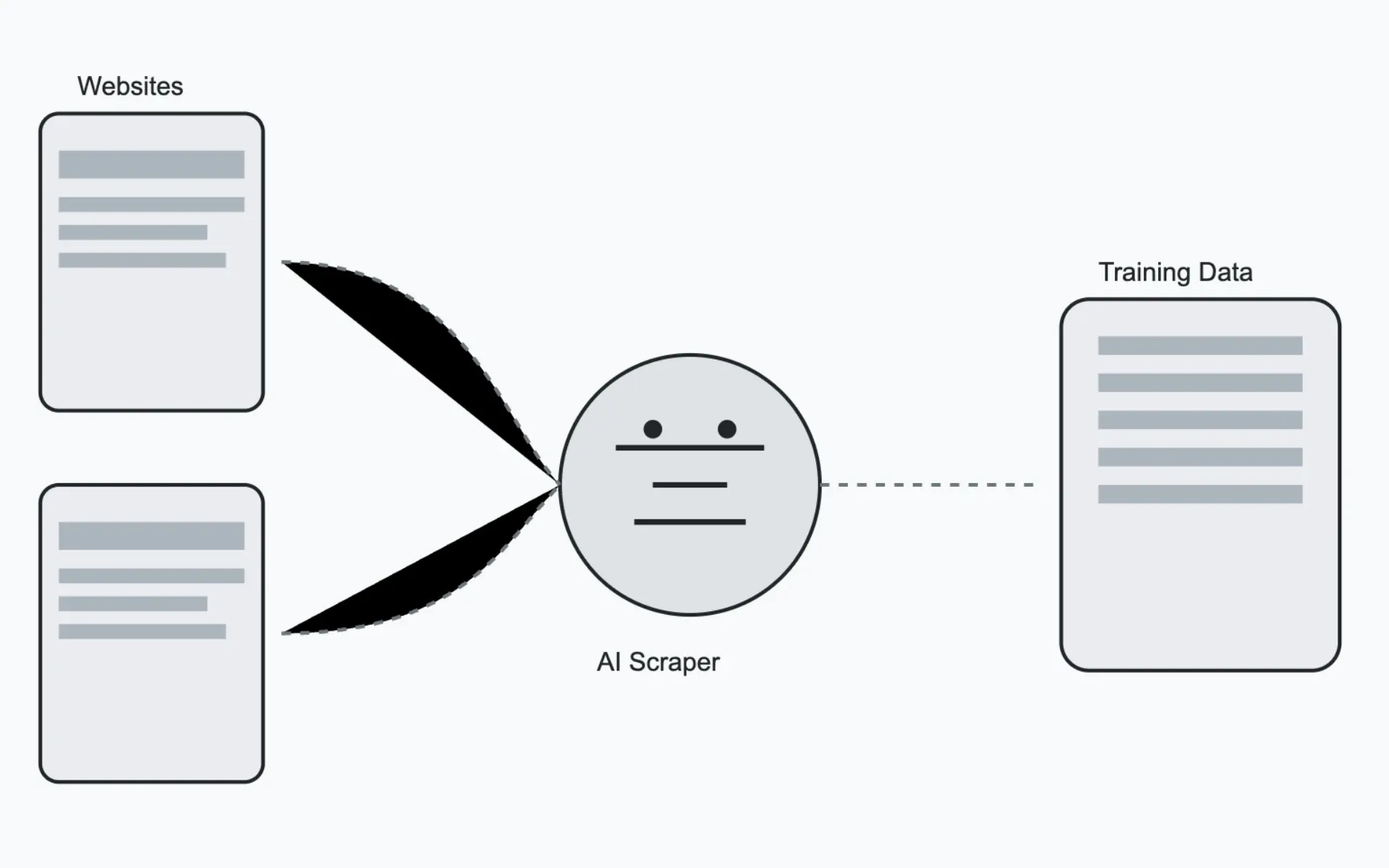

Latest developments in AI scraping protection tools from major tech companies, as content creators seek control over training data usage.

In a significant development for digital publishers and content creators, technology companies are rolling out new tools to address concerns about unauthorized AI content scraping. In announcements made in June and September 2024, Cloudflare and HUMAN Security introduced solutions that give publishers greater control over how artificial intelligence systems access and use their content.

Cloudflare unveiled its AI Audit tool on September 23, 2024, a notable step in the ongoing debate over AI training data rights. According to Cloudflare CEO Matthew Prince, websites receive thousands of AI bot visits per day, creating significant operational and economic challenges for content creators. The tool provides analytics and controls that let publishers monitor and manage how AI models access their content.
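To make the idea of per-crawler analytics concrete, the sketch below counts requests from a few AI crawlers per day in a set of access-log lines. It is a minimal illustration, not how AI Audit works internally. The simplified log format (`timestamp|path|user agent`), the function name `count_ai_visits`, and the short token list are assumptions; the tokens are examples of user-agent strings some AI crawlers announce, and because user agents can be spoofed, real products rely on additional verification.

```python
from collections import Counter
from datetime import datetime

# Example user-agent tokens announced by some AI crawlers (illustrative, not exhaustive).
AI_CRAWLER_TOKENS = ("GPTBot", "ClaudeBot", "PerplexityBot", "CCBot", "Bytespider")


def count_ai_visits(log_lines):
    """Count AI-crawler requests per (day, crawler token).

    Each line is assumed (hypothetically) to look like:
        2024-09-23T10:15:00Z|/path|User-Agent string
    """
    counts = Counter()
    for line in log_lines:
        timestamp, _path, user_agent = line.split("|", 2)
        day = datetime.strptime(timestamp, "%Y-%m-%dT%H:%M:%SZ").date().isoformat()
        for token in AI_CRAWLER_TOKENS:
            if token in user_agent:
                counts[(day, token)] += 1
                break  # attribute each request to at most one crawler
    return counts


logs = [
    "2024-09-23T10:15:00Z|/news/a|Mozilla/5.0 (compatible; GPTBot/1.0)",
    "2024-09-23T10:16:00Z|/news/b|Mozilla/5.0 (compatible; GPTBot/1.0)",
    "2024-09-23T11:00:00Z|/news/a|Mozilla/5.0 (Windows NT 10.0) Firefox/130.0",
    "2024-09-24T09:00:00Z|/news/c|CCBot/2.0 (https://commoncrawl.org/faq/)",
]
print(count_ai_visits(logs))
```

Grouping by day and crawler answers the "when" and "how often" questions; the "why" (training, search, or other use) would need information about each crawler's declared purpose, which this sketch does not model.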

HUMAN Security, a major player in cybersecurity, has documented a 107% year-over-year increase in scraping attacks. According to Robert Conrad, Head of Engineering at Crunchbase, the impact of uncontrolled AI scraping extends beyond simple content theft to include performance issues and resource strain. Crunchbase implemented HUMAN's Scraping Defense solution to address these challenges while maintaining legitimate bot access.

The technical capabilities of these new tools reflect the complexity of the AI scraping challenge. Cloudflare's AI Audit provides granular analytics showing why, when, and how often AI models access a website. HUMAN's solution employs over 400 algorithms and adaptive machine learning models to analyze 2,500+ signals per interaction, processing more than 20 trillion digital interactions weekly across 3 billion unique devices.
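Combining many signals into one verdict is the core idea behind signal-based bot detection. The toy example below combines three hypothetical, normalized signals with fixed, made-up weights through a logistic function. It is only an illustration of weighted scoring under those assumptions: the signal names, weights, bias, and threshold are invented here, the weights are not learned or adaptive, and nothing about HUMAN's actual algorithms is implied.

```python
import math

# Hypothetical per-request signals, each normalized to the range 0..1.
# The weights and bias are invented for illustration, not trained.
WEIGHTS = {
    "requests_per_minute": 2.5,   # unusually high request rate
    "headless_browser": 3.0,      # automation framework detected
    "missing_mouse_events": 1.5,  # no human-like interaction observed
}
BIAS = -3.0


def bot_probability(signals):
    """Combine normalized signals into a 0..1 bot likelihood via a logistic function."""
    z = BIAS + sum(weight * signals.get(name, 0.0) for name, weight in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def classify(signals, threshold=0.8):
    """Label a request 'bot' when its combined score reaches the (hypothetical) threshold."""
    return "bot" if bot_probability(signals) >= threshold else "human"


print(classify({"requests_per_minute": 1.0, "headless_browser": 1.0, "missing_mouse_events": 1.0}))
print(classify({"requests_per_minute": 0.1}))
```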

The emergence of these tools comes amid growing tensions between content creators and AI companies. Earlier in 2024, AI-powered search startup Perplexity faced criticism for allegedly scraping websites that had explicitly blocked crawling through their robots.txt files. The incident highlighted the limits of traditional content protection: robots.txt is a voluntary convention that a crawler can simply ignore, so publishers need solutions that can actually enforce their preferences.
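Python's standard-library `urllib.robotparser` shows what robots.txt actually does: it answers the question "may this crawler fetch this URL?" for a crawler that chooses to ask. The robots.txt content and the helper name `crawler_may_fetch` below are illustrative assumptions. Nothing in the protocol stops a crawler from skipping the check, which is why the Perplexity allegations exposed its limits.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt: opt one AI crawler out, allow everyone else.
ROBOTS_TXT = """\
User-agent: PerplexityBot
Disallow: /

User-agent: *
Allow: /
"""


def crawler_may_fetch(user_agent, url, robots_txt=ROBOTS_TXT):
    """Return what robots.txt *asks* of this crawler; compliance is up to the crawler."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(crawler_may_fetch("PerplexityBot", "https://example.com/news/story"))  # False
print(crawler_may_fetch("SomeSearchBot", "https://example.com/news/story"))  # True
```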

The economic implications of AI scraping are substantial. Some website owners report scraping activity so intense it resembles distributed denial-of-service (DDoS) attacks, leading to increased cloud computing costs and degraded service performance. While major publishers have secured licensing agreements with companies like OpenAI, smaller content creators often lack protection or compensation mechanisms.
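When scraping volume starts to resemble a DDoS attack, a common generic mitigation is per-client rate limiting. The token-bucket sketch below is one standard way to do it; the source does not say either vendor uses this exact technique, and the capacity, refill rate, and client identifiers are hypothetical. Timestamps are passed in explicitly so the behavior is deterministic.

```python
class TokenBucket:
    """Per-client token bucket: bursts up to `capacity`, refills at `rate` tokens per second."""

    def __init__(self, capacity, rate):
        self.capacity = capacity
        self.rate = rate
        self.tokens = float(capacity)
        self.last = None  # timestamp (seconds) of the previous request

    def allow(self, now):
        """Return True if a request arriving at time `now` may proceed."""
        if self.last is not None:
            elapsed = now - self.last
            self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


buckets = {}  # client identifier -> TokenBucket (hypothetical in-memory store)


def allow_request(client_id, now, capacity=10, rate=1.0):
    bucket = buckets.get(client_id)
    if bucket is None:
        bucket = buckets[client_id] = TokenBucket(capacity, rate)
    return bucket.allow(now)


# Usage: a client firing 50 requests within one second gets only its burst allowance.
results = [allow_request("scraper-1", i * 0.02) for i in range(50)]
print(sum(results), "of", len(results), "requests allowed")
```

A token bucket tolerates short bursts from ordinary readers while capping sustained throughput, which is the pattern that distinguishes aggressive scrapers.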

Looking ahead, both companies have announced plans for expanded capabilities. Cloudflare intends to launch a marketplace where website owners can set prices for AI companies seeking access to their content. This development, scheduled for discussion at Cloudflare's Builder Day Live Stream event on September 26, 2024, could create a standardized framework for content licensing in the AI era.

HUMAN Security's approach focuses on immediate protection through advanced detection and mitigation strategies. Their system includes:

- Precheck mechanisms to block scraping bots at the network edge

- Behavioral analysis and intelligent fingerprinting

- Customer-specific mitigation policies

- Detailed traffic analysis and incident investigation tools
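Behavioral analysis looks at how a client acts rather than what it claims to be. One simple behavioral signal is timing regularity: scripted clients often request pages at near-constant intervals, while people browse irregularly. The sketch below measures this with the coefficient of variation of the gaps between requests. The function names and the 0.1 threshold are hypothetical, and a real system would treat this as one weak signal among many, since a scraper can add random jitter.

```python
from statistics import mean, pstdev


def interval_regularity(timestamps):
    """Coefficient of variation of gaps between requests (lower means more machine-like)."""
    gaps = [b - a for a, b in zip(timestamps, timestamps[1:])]
    if len(gaps) < 2:
        return None  # not enough requests to judge
    avg = mean(gaps)
    if avg == 0:
        return 0.0
    return pstdev(gaps) / avg


def looks_automated(timestamps, cv_threshold=0.1):
    """Flag a client whose request timing is suspiciously regular (hypothetical threshold)."""
    cv = interval_regularity(timestamps)
    return cv is not None and cv < cv_threshold


scripted = [i * 2.0 for i in range(20)]          # one request every 2 seconds exactly
person = [0.0, 3.1, 9.8, 11.0, 25.4, 27.9, 41.2]  # irregular reading pattern
print(looks_automated(scripted), looks_automated(person))
```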

The technical implementation of these solutions involves machine learning models and multiple layers of protection. HUMAN's system examines hundreds of non-personally identifiable client-side indicators to distinguish legitimate from malicious bot activity. Cloudflare's tools let publishers block all AI scraping with a single click while retaining granular control over which crawlers are allowed access.
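A "block everything with one switch, then allow exceptions" model can be expressed as a small policy object. The sketch below is a hypothetical illustration of that design, not Cloudflare's configuration format: the class name, the `overrides` mapping, and the token list are assumptions. Matching on user-agent text alone is easy to spoof, so a production system would also verify crawlers, for example against the source IP ranges some AI companies publish.

```python
from dataclasses import dataclass, field

# Example AI crawler user-agent tokens (illustrative, not exhaustive).
KNOWN_AI_CRAWLERS = ("GPTBot", "ClaudeBot", "PerplexityBot", "CCBot", "Bytespider")


@dataclass
class AIAccessPolicy:
    block_all_ai: bool = False                     # the single global switch
    overrides: dict = field(default_factory=dict)  # crawler token -> True (allow) / False (block)

    def decide(self, user_agent):
        """Return 'allow' or 'block' for a request, based on its user agent."""
        crawler = next((t for t in KNOWN_AI_CRAWLERS if t in user_agent), None)
        if crawler is None:
            return "allow"  # not a recognized AI crawler; other defenses still apply
        if crawler in self.overrides:
            return "allow" if self.overrides[crawler] else "block"
        return "block" if self.block_all_ai else "allow"


# Usage: block all AI crawlers except one the publisher has licensed (hypothetical choice).
policy = AIAccessPolicy(block_all_ai=True, overrides={"GPTBot": True})
print(policy.decide("Mozilla/5.0 (compatible; GPTBot/1.0)"))
print(policy.decide("CCBot/2.0 (https://commoncrawl.org/faq/)"))
```

Checking per-crawler overrides before the global switch is what lets a single click coexist with granular exceptions.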

Cybersecurity experts have described these tools as a crucial step toward balancing AI innovation with content creators' rights. The solutions address not only content protection but also performance and resource-management concerns.

Key Facts

- Cloudflare AI Audit announcement date: September 23, 2024

- HUMAN Security reports a 107% year-over-year increase in scraping attacks

- HUMAN's system processes more than 20 trillion digital interactions weekly

- Coverage extends across 3 billion unique devices

- Each interaction is analyzed against 2,500+ signals

- More than 400 algorithms are used for detection

- Response time measured in milliseconds
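The weekly volume figure is easier to grasp as a rate. The short calculation below converts 20 trillion interactions per week into an average per second; since the reported figure is "more than 20 trillion", the result is a lower bound on the average, and real traffic is not spread evenly across the week.

```python
WEEKLY_INTERACTIONS = 20_000_000_000_000  # "more than 20 trillion" per week
SECONDS_PER_WEEK = 7 * 24 * 60 * 60       # 604,800 seconds

per_second = WEEKLY_INTERACTIONS / SECONDS_PER_WEEK
print(f"{per_second:,.0f} interactions per second on average")  # 33,068,783 ...
```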