Meta reports 61.4M content removals under EU's Digital Services Act, with 1,000+ staff on compliance

Meta details DSA compliance data showing 90% audit success rate across Facebook and Instagram with 529.7M EU users.

Meta released its comprehensive Digital Services Act (DSA) transparency report on November 28, 2024, revealing detailed insights into content moderation and platform safety measures across Facebook and Instagram in the European Union. The report covers operations from April to September 2024, providing unprecedented transparency into the company's compliance efforts.

In 2023, Meta assembled a cross-functional team of over 1,000 professionals to develop DSA compliance solutions. A dedicated group of more than 40 specialists supported the independent audit process, investing over 20,000 hours in documentation, information requests, and auditor meetings. This thorough approach yielded strong results, with Meta achieving full compliance in more than 90% of audited areas and receiving no "adverse" conclusions.

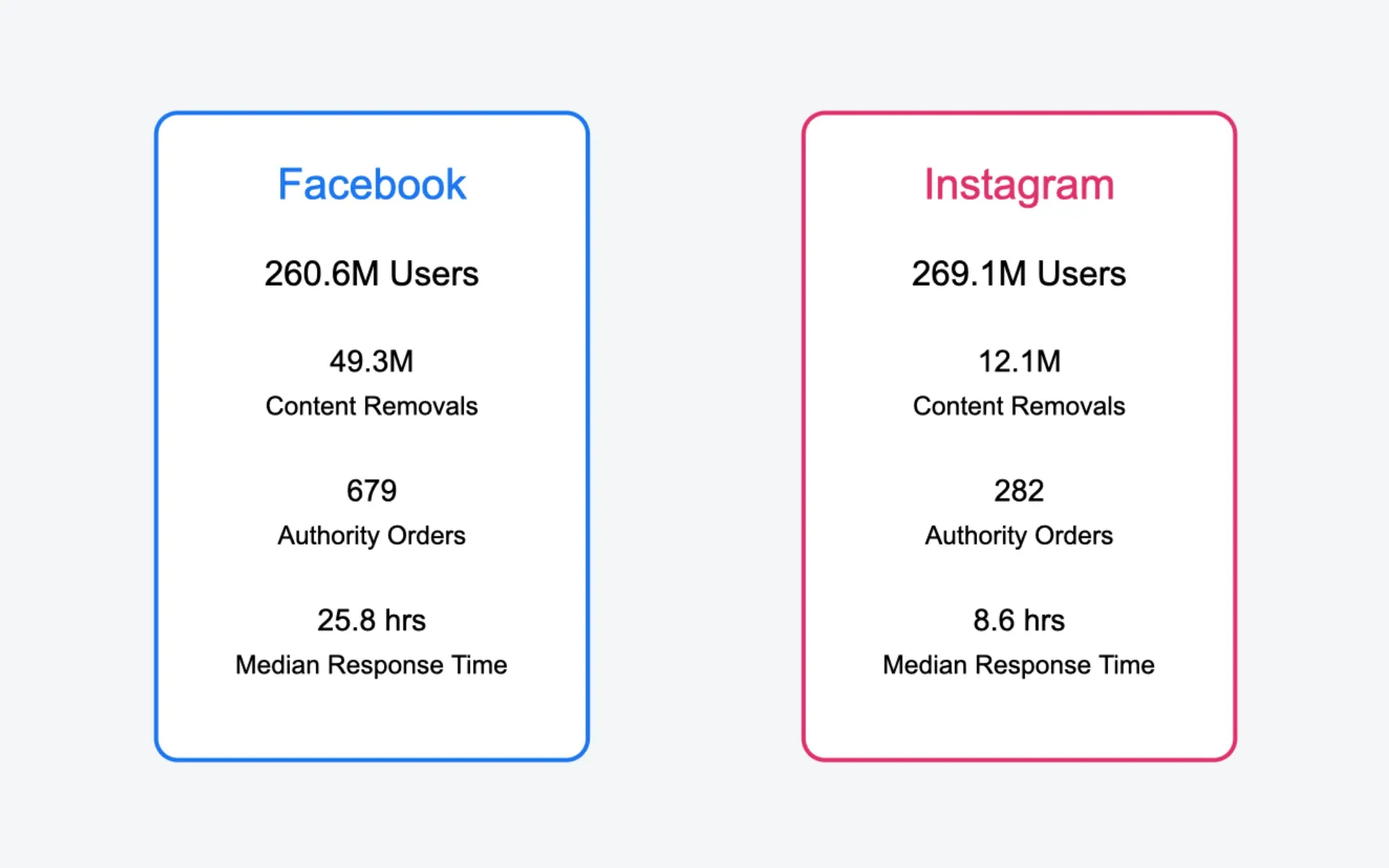

The scale of content moderation across both platforms is substantial. Facebook's systems processed approximately 49.3 million content removals in the EU during the reporting period, with automated tools handling 46.7 million cases. Instagram's moderation efforts resulted in about 12.1 million removals, with automation managing 10.5 million instances.
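To put those figures in proportion, the share of removals handled by automation can be worked out directly from the numbers above. The short Python sketch below is only an illustration of that arithmetic. The variable and function names are ours, not from Meta's report, and the inputs are the rounded figures quoted in this article.

```python
# Removal counts in millions, as quoted in the article (rounded figures).
removals = {
    "Facebook": {"total": 49.3, "automated": 46.7},
    "Instagram": {"total": 12.1, "automated": 10.5},
}


def automation_share(total: float, automated: float) -> float:
    """Return the percentage of removals handled by automated tools."""
    return 100 * automated / total


# Per-platform share, rounded to one decimal place.
shares = {
    name: round(automation_share(d["total"], d["automated"]), 1)
    for name, d in removals.items()
}

# Combined share across both platforms.
combined_total = sum(d["total"] for d in removals.values())
combined_automated = sum(d["automated"] for d in removals.values())
combined_share = round(automation_share(combined_total, combined_automated), 1)

for name, share in shares.items():
    print(f"{name}: {share}% of removals automated")
print(f"Combined: {combined_share}% of removals automated")
```

On these rounded inputs, automation accounts for roughly 94.7% of Facebook removals, 86.8% of Instagram removals, and 93.2% of the combined 61.4 million.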

Platform usage data reveals significant reach across the EU. Facebook has approximately 260.6 million monthly active users, while Instagram has about 269.1 million. On Facebook, Germany accounts for 32.9 million monthly active users and France for 42.3 million. On Instagram, Germany accounts for 45 million monthly active users and France for 41.5 million.

Content moderation actions span various violation categories. Hate speech removals reached 1.15 million instances on Facebook and 1.45 million on Instagram. The platforms processed different volumes of authority orders from EU member states, with Facebook handling 679 orders and Instagram processing 282 orders related to illegal content.

Meta's linguistic capabilities encompass all 24 official EU languages, with specialized teams for regional requirements. The company maintains 540 French-language content reviewers, 406 German-language reviewers, and 2,325 Spanish-language reviewers, demonstrating significant investment in regional expertise.

The report also gives response times. Facebook's median response time for authority orders regarding illegal content is 25.8 hours, while Instagram's median for similar orders is 8.6 hours. Both platforms report an automation overturn rate of 7.47%.

Users actively contest moderation decisions. Facebook processed over 3.5 million appeals regarding content removals, resulting in 871,759 content restorations. Instagram handled more than 1.5 million appeals, leading to 268,853 content restorations.
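The appeal figures imply a rough restoration rate per platform. The Python sketch below shows the calculation under one stated assumption: because the report gives appeal counts only as "over" 3.5 million and "more than" 1.5 million, the code uses those rounded floors as denominators, so the results are upper bounds rather than exact rates. The names are illustrative.

```python
# Appeal counts are lower bounds ("over 3.5 million", "more than 1.5 million"),
# so the computed rates are upper bounds on the true restoration rate.
appeals = {
    "Facebook": {"appeals_at_least": 3_500_000, "restored": 871_759},
    "Instagram": {"appeals_at_least": 1_500_000, "restored": 268_853},
}


def max_restoration_rate(appeals_at_least: int, restored: int) -> float:
    """Upper bound on the percentage of appeals that ended in restoration."""
    return 100 * restored / appeals_at_least


rates = {
    name: round(max_restoration_rate(d["appeals_at_least"], d["restored"]), 1)
    for name, d in appeals.items()
}

for name, rate in rates.items():
    print(f"{name}: at most {rate}% of appeals led to restoration")
```

By this estimate, at most about 24.9% of Facebook appeals and 17.9% of Instagram appeals ended with the content being restored.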

The content moderation infrastructure operates through three primary mechanisms: rate limits to prevent bot activity, matching technology for identifying previously flagged content, and artificial intelligence systems for detecting potential violations. This technological framework is supported by comprehensive human oversight.
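As a purely illustrative sketch of how three such layers might fit together, the Python below chains a rate limiter, a lookup against fingerprints of previously flagged content, and a classifier score. None of this is Meta's implementation. The class and function names, the thresholds, the use of exact SHA-256 matching, and the keyword-based stand-in for an AI model are all assumptions made for the example.

```python
import hashlib
from collections import defaultdict, deque


class RateLimiter:
    """Sliding window: at most `limit` posts per `window` seconds per account."""

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        self.events = defaultdict(deque)  # account -> timestamps of recent posts

    def allow(self, account: str, now: float) -> bool:
        recent = self.events[account]
        # Drop timestamps that have fallen out of the window.
        while recent and now - recent[0] >= self.window:
            recent.popleft()
        if len(recent) >= self.limit:
            return False
        recent.append(now)
        return True


def fingerprint(content: bytes) -> str:
    """Exact-match fingerprint (assumption: a real system may use fuzzier matching)."""
    return hashlib.sha256(content).hexdigest()


def toy_classifier(content: bytes) -> float:
    """Hypothetical stand-in for an AI model: returns a violation score in [0, 1]."""
    return 0.9 if b"spam" in content.lower() else 0.1


def moderate(account, content, now, limiter, known_hashes, threshold=0.8):
    """Apply the three layers in order and return a decision label."""
    if not limiter.allow(account, now):
        return "rate_limited"
    if fingerprint(content) in known_hashes:
        return "matched"
    if toy_classifier(content) >= threshold:
        return "flagged_for_review"
    return "allowed"


limiter = RateLimiter(limit=2, window=60)
known = {fingerprint(b"previously removed post")}
print(moderate("a", b"hello", 0, limiter, known))
print(moderate("a", b"previously removed post", 1, limiter, known))
print(moderate("a", b"one more post", 2, limiter, known))
print(moderate("b", b"Buy SPAM now", 3, limiter, known))
```

In this toy ordering, the cheapest check runs first and the flagged result is routed to review rather than removed outright, which mirrors the article's point that the automated layers sit under human oversight.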

Meta's support system for content moderators includes extensive measures such as 24/7 independent support programs, individual and group counseling sessions, and various clinical services, acknowledging the challenging nature of content moderation work.

Key Facts

- Over 1,000 staff dedicated to DSA compliance

- 40+ specialists supporting audit process

- Over 20,000 hours invested in audit documentation

- Full compliance in more than 90% of audited areas

- 260.6 million monthly active Facebook users in EU

- 269.1 million monthly active Instagram users in EU

- 7.47% automation overturn rate for content moderation

- 25.8 hours median response time for Facebook authority orders

- 8.6 hours median response time for Instagram authority orders

- 679 authority orders processed on Facebook

- 282 authority orders processed on Instagram

- Coverage in all 24 official EU languages

- Over 3.5 million user appeals processed on Facebook

- More than 1.5 million user appeals processed on Instagram